User testing gives you a deeper understanding of the people who use your applications and products, and mastering it can be a turning point in everything you do. Below, we explain what you need to know about this technique, including how companies use it to improve their products and, ultimately, increase their revenue.

What is User Testing?

User testing is defined as a process in product development and design that involves gathering user feedback and evaluating the user experience of a software application, website, mobile app, or physical device.

User testing is conducted by having real sample users from the target audience interact with the product while researchers or designers observe their actions, behaviors, and feedback.

During user testing, participants are asked to perform specific tasks or interact with the product while researchers carefully observe and record their actions, comments, and feedback. This process aims to uncover potential usability issues, such as confusing navigation, unclear instructions, or frustrating interactions, that may hinder users from achieving their goals efficiently.

Key Components of User Testing

Key Benefits of User Testing

User Testing Examples

User testing helps uncover usability issues and improve the overall user experience. Below are detailed examples of user testing scenarios for different types of products and services, illustrating how real users might interact with them.

Example 1. E-commerce Website Checkout Process:

- Scenario: Imagine you are an online shopper looking to purchase a product from an e-commerce website. Your goal is to find a specific item, add it to your cart, and complete the checkout process.

- Testing: During user testing, participants would be asked to perform this task while researchers observe their actions and gather feedback. Researchers might track the time it takes to complete the task, note any difficulties encountered, and ask users to share their thoughts about the process.

Learn more: E-commerce Testing Tools

Example 2. Mobile App Navigation:

- Scenario: Users want to explore and find specific features or information within a mobile app, such as a travel booking app or a social media platform.

- Testing: Test participants are given specific tasks, such as finding and booking a flight or posting a status update. Researchers observe how easily users can navigate the app, locate relevant features, and complete tasks. They also take note of any user frustration or confusion.

Learn more: What is Mobile UX?

Example 3. Online Form Completion:

- Scenario: Users need to fill out an online form, such as a registration form for a website or an application.

- Testing: Participants are asked to complete the form while researchers monitor their progress. Researchers pay attention to factors like the clarity of form fields, the appropriateness of error messages, and the overall flow of the process. Users’ ability to complete the form accurately and efficiently is assessed.

Example 4. Software Application Feature Testing:

- Scenario: Users are required to perform specific actions or tasks within a software application, like editing a document using a word processing program.

- Testing: Test participants are given a set of tasks to complete within the application. Researchers observe how users interact with the software, track their success rates, and gather feedback on the ease of use, intuitiveness of features, and any challenges faced during the tasks.

Example 5. Website Content Discovery:

- Scenario: Users are tasked with finding specific information on a website, such as a product description, contact details, or a particular article.

- Testing: During this test, participants are given the information they need to find and asked to locate it on the website. Researchers watch their navigation choices, monitor the time it takes to find the information, and gather feedback on the website’s organization and search functionality.

Learn more: Website Usability Testing Tools

Example 6. Mobile App Onboarding Process:

- Scenario: New users have just downloaded a mobile app and are going through the initial onboarding screens and setup.

- Testing: Participants are asked to go through the app’s onboarding process, including signing up or logging in, configuring preferences, and understanding key features. Researchers assess how easily users can complete these initial steps and whether they encounter any confusion or frustration.

Learn more: What is Mobile Usability Testing?

Example 7. Voice Assistant Interaction Testing:

- Scenario: Users are asked to interact with a voice-controlled virtual assistant (e.g., Siri, Alexa, Google Assistant) to perform tasks like setting reminders, sending messages, or retrieving information.

- Testing: Researchers observe how accurately the virtual assistant understands user commands and how effectively it responds. They also assess the overall user experience and whether users encounter any limitations or misunderstandings.

Example 8. Video Game User Experience Testing:

- Scenario: Gamers are tasked with playing a video game and providing feedback on the game’s controls, graphics, gameplay mechanics, and overall enjoyment.

- Testing: Participants play the game while researchers monitor their gameplay experience. Feedback is gathered on aspects like difficulty levels, user interface design, and any bugs or glitches encountered.

Example 9. Mobile Banking App Transaction Testing:

- Scenario: Users are given a set of financial tasks to complete in a mobile banking app, such as transferring funds, paying bills, or checking account balances.

- Testing: Participants navigate the banking app to perform the assigned tasks. Researchers assess the ease of use, security features, and overall user satisfaction. They may also gather feedback on the clarity of transaction records.

Example 10. Wearable Fitness Tracker Usage Testing:

- Scenario: Users are provided with a wearable fitness tracker (e.g., smartwatch) and asked to track their daily physical activity, heart rate, and sleep patterns.

- Testing: Participants wear the device and use its associated app to monitor their health data. Researchers collect feedback on the accuracy of data, ease of syncing, and whether users find the tracker motivating or useful for fitness goals.

These diverse examples showcase the versatility of user testing across various domains, from digital products like apps and websites to hardware devices and interactive systems. User testing remains valuable for assessing usability, functionality, and user satisfaction, allowing organizations to refine their products and services to better meet user needs.

Learn more: What is Digital Experience Design?

Types of User Testing

User testing comes in many forms, each designed to uncover different insights about a product’s usability, functionality, and overall user experience. Below are the most common types of user testing, along with their key purposes, methods, and ideal use cases.

1. Usability Testing:

- Purpose of usability testing is to evaluate the overall usability and user-friendliness of a product.

- Process: Participants perform specific tasks while researchers observe their interactions, record their actions and feedback, and assess the ease of use.

- Focus: Identifying usability issues, navigation problems, and user interface improvements.

2. Explorative Testing:

- Purpose of explorative testing is to explore user behaviors and preferences in an open-ended way.

- Process: Participants are given minimal guidance and are observed as they explore a product or website freely.

- Focus: Understanding how users naturally interact with a product without predefined tasks.

3. A/B Testing:

- Purpose of A/B testing is to compare two or more versions of a product or feature to determine which one performs better.

- Process: Different user groups are presented with different versions, and their interactions are compared to assess performance and user preferences.

- Focus: Identifying which design or feature variation yields better outcomes, such as higher click-through rates or conversions.

4. Accessibility Testing:

- Purpose of accessibility testing is to evaluate a product’s accessibility and compliance with accessibility standards (e.g., WCAG) for users with disabilities.

- Process: Participants with disabilities (e.g., visual impairments, motor impairments) interact with the product using assistive technologies.

- Focus: Identifying barriers and ensuring that the product is inclusive and usable for all users.

5. Beta Testing:

- Purpose of beta testing is to test a pre-release version of a product with a broader audience to identify bugs, gather feedback, and ensure stability before the official launch.

- Process: Real users use the product in their real-world environments and provide feedback on their experiences.

- Focus: Validating the product’s readiness for a wider user base and addressing critical issues before launch.

6. Remote Testing:

- Purpose of remote testing is to conduct user testing with participants who are geographically distant from the testing team.

- Process: Participants use the product remotely, and researchers collect data through video conferencing, screen sharing, or specialized testing platforms.

- Focus: Overcoming geographical constraints to gather insights from a diverse user base.

7. Comparative Testing:

- Purpose of comparative testing is to compare a product with competitors or similar products in the market.

- Process: Users are asked to use both the product being tested and a competing product, and their experiences are compared.

- Focus: Identifying strengths, weaknesses, and areas for improvement relative to competitors.

8. Benchmark Testing:

- Purpose of benchmark testing is to establish a baseline of usability and performance metrics for a product.

- Process: A series of standardized usability tests are conducted on the product to establish a baseline of performance.

- Focus: Setting performance standards and measuring progress over time through repeated testing.

9. Formative Testing:

- Purpose of formative testing is to gather feedback early in the design or development process to inform product improvements.

- Process: Users provide feedback on early prototypes, wireframes, or design concepts.

- Focus: Iterative design improvements based on early user insights.

10. Summative Testing:

- Purpose of summative testing is to assess the overall effectiveness and performance of a product after development is complete.

- Process: Comprehensive testing is conducted to evaluate the product’s adherence to predefined criteria and objectives.

- Focus: Assessing whether the product meets its intended goals and criteria for success.
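
To make the A/B testing comparison described in this list more concrete, here is a minimal sketch of one common way to check whether a difference in conversion rates between two versions is likely to be more than noise: a two-proportion z-test. The function name and the visitor and conversion counts are hypothetical, chosen only for illustration; a real study should also plan its sample size in advance.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / conv_b: number of users who converted in each version.
    n_a / n_b: number of users who saw each version.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("conversion rate is 0% or 100% in both groups")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical example: version A converts 120 of 2400 users, version B 156 of 2400.
z, p = two_proportion_z_test(conv_a=120, n_a=2400, conv_b=156, n_b=2400)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests that the gap between the two versions is unlikely to be due to chance alone, but it says nothing about why users preferred one version; that is where the qualitative methods in this article come in.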

User Testing Methods

User testing encompasses various methodologies, each designed to uncover specific insights about your product’s usability and user experience. Below is an in-depth exploration of key user testing methods, including their processes, benefits, and ideal use cases.

- Moderated Testing: In moderated testing, a facilitator (moderator) guides participants through a series of predefined tasks while observing their interactions with the product. The moderator can ask questions, probe for insights, and ensure a controlled testing environment.

Use Cases: This method is valuable for in-depth, qualitative insights, especially when you want to understand user thought processes and gather detailed feedback. It’s suitable for identifying usability issues, evaluating prototypes, and testing specific features.

- Unmoderated Testing: Unmoderated testing involves participants independently using the product without a moderator’s presence. Participants follow predefined tasks, and their interactions are recorded using specialized software.

Use Cases: Unmoderated testing is cost-effective and efficient for collecting quantitative data from a larger number of participants. It’s suitable for remote testing, A/B testing, or when a facilitator’s presence is not feasible.

- Thinking-Aloud Testing: In thinking-aloud usability testing, participants vocalize their thoughts, feelings, and reactions as they navigate the product and complete tasks. The goal is to gain insights into users’ cognitive processes.

Use Cases: This method is excellent for understanding user decision-making, uncovering usability issues, and improving user interfaces. It’s particularly useful for testing the intuitiveness of navigation and features.

- Remote Usability Testing: Remote usability testing allows participants to test a product from their own location using screen-sharing or recording software. Researchers provide tasks and guidelines remotely.

Use Cases: Remote usability testing is convenient and cost-effective, making it suitable for gathering insights from a geographically dispersed user base. It’s often used for testing websites, apps, or digital products.

- Card Sorting: Card sorting involves participants organizing content or features into categories or groups based on their mental models. Researchers analyze how users structure information.

Use Cases: This method helps in optimizing information architecture, navigation menus, and content organization. It’s valuable during the early design phase to ensure that the product’s structure aligns with user expectations.

- First Click Testing: First click testing focuses on the first action users take when presented with a specific task or interface element. It helps evaluate the effectiveness of the initial interaction.

Use Cases: This method is useful for assessing the clarity of calls-to-action, menu labels, or navigation paths. It helps ensure that users can find what they’re looking for with minimal effort.

- Heuristic Evaluation: Heuristic evaluation involves usability experts assessing a product against a set of established usability heuristics or principles. They identify potential usability issues based on their expertise.

Use Cases: This method is valuable for identifying usability problems early in the design process.

- Mobile Usability Testing: Mobile usability testing focuses specifically on evaluating the usability and user experience of mobile applications or mobile-responsive websites.

Use Cases: With the increasing use of mobile devices, this type of testing is crucial to ensure that mobile apps and websites are user-friendly and functional on various screen sizes and devices.

- Five-Second Test: In a five-second test, participants are shown a screen or interface for five seconds and then asked questions about what they remember. This method helps assess the clarity of important visual elements and messaging.

Use Cases: It’s useful for testing the impact of first impressions, branding, and the visibility of critical information.

- Preference Testing: Preference testing focuses on gathering user preferences and feedback regarding design elements, features, or options. Participants express their preferences among different design variations.

Use Cases: It helps in making design decisions based on user preferences, such as choosing between multiple interface designs or color schemes.

- Rapid Iterative Testing and Evaluation (RITE): RITE is an iterative approach to usability testing where changes and improvements are made to the product between test sessions. It involves quick cycles of testing and refining.

Use Cases: RITE is beneficial when rapid improvements are required or when addressing critical usability issues during the development process.

- Tree Testing: Tree testing evaluates the effectiveness of a product’s information architecture and navigation structure by having participants complete tasks that involve finding specific pieces of content or information within a text-based structure.

Use Cases: It helps ensure that users can efficiently locate content or information in the product’s hierarchy.
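
As an illustration of how card sorting results from the list above might be analyzed, the sketch below counts how often participants placed two cards in the same group. The card names, the sample data, and the `co_occurrence` helper are all hypothetical; dedicated card sorting tools typically offer richer analyses such as similarity matrices and dendrograms.

```python
from collections import Counter
from itertools import combinations

def co_occurrence(sorts):
    """Count, for each pair of cards, how many participants grouped them together.

    sorts: one entry per participant; each entry is a list of groups,
    and each group is a list of card names.
    """
    counts = Counter()
    for groups in sorts:
        for group in groups:
            # Sort names so each pair is always stored in the same order.
            for pair in combinations(sorted(group), 2):
                counts[pair] += 1
    return counts

# Hypothetical results from three participants sorting four cards.
sorts = [
    [["Shipping", "Returns"], ["Login", "Profile"]],
    [["Shipping", "Returns", "Profile"], ["Login"]],
    [["Shipping", "Returns"], ["Login", "Profile"]],
]

pairs = co_occurrence(sorts)
for (card_a, card_b), n in pairs.most_common():
    print(f"{card_a} + {card_b}: grouped together by {n} of {len(sorts)} participants")
```

Pairs that most participants group together are good candidates to sit under the same menu or category, while pairs that are rarely grouped probably belong in different parts of the navigation.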

Learn more: What is a User Journey Map?

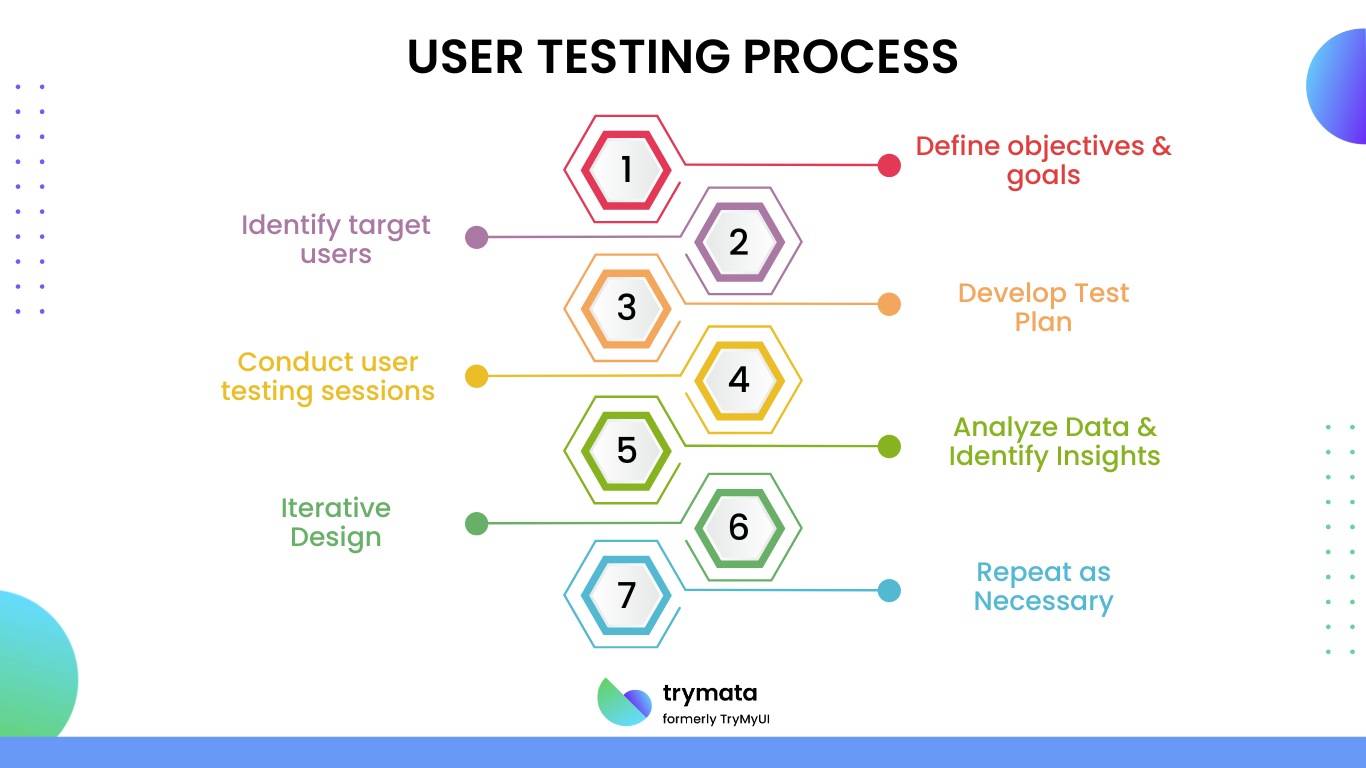

15 User Testing Best Practices for 2025

User testing is a crucial component of the product development process, and following best practices ensures that you gather meaningful insights and make informed decisions. Here are some user testing best practices:

- Define Clear Objectives and Goals: Start by clearly defining the objectives and goals of your user testing. What specific aspects of the product’s usability or user experience are you trying to evaluate or improve? Have well-defined objectives and a testing guide in place for the entire testing process.

- Recruit Representative Participants: Select participants who closely match your target audience or user personas. Diversity in age, gender, background, and experience helps ensure a more comprehensive range of user perspectives.

- Create Realistic Scenarios and Tasks: Develop realistic and relevant test scenarios and tasks that mimic how users would naturally interact with the product. Ensure that tasks are clear, unbiased, and representative of common user actions.

- Encourage Natural Behavior: During testing, encourage participants to behave naturally. Emphasize that you are evaluating the product and not assessing their abilities. The goal is to observe how users would typically interact with the product.

- Minimize Bias and Distractions: Create a neutral testing environment that minimizes distractions and reduces bias. Avoid influencing participants with leading questions or suggestions. Let them explore and interact with the product independently.

- Use a Mix of Qualitative and Quantitative Data: Gather both qualitative and quantitative data during usability testing. Qualitative data includes participant observations, comments, and feedback, while quantitative data can involve metrics like task completion times or success rates.

- Record and Analyze Sessions: Record usability testing sessions, including participants’ screen interactions and audio commentary. This allows you to revisit sessions for a deeper analysis and share concrete examples of usability issues with the team.

- Iterate and Prioritize Improvements: Usability testing should be an iterative process. After analyzing the findings, work with the development and design teams to prioritize and address identified usability issues. Implement changes and then retest the product to ensure that improvements have the desired impact.

- Involve Stakeholders: Include stakeholders, such as product managers, designers, and developers, in the testing process or share testing results with them. This helps align the team’s understanding of user needs and priorities.

- Maintain a Positive and Supportive Environment: Create a supportive atmosphere during testing sessions. Participants should feel comfortable sharing their thoughts and concerns without fear of judgment. Facilitators should be empathetic and encouraging.

- Focus on the User’s Perspective: Put yourself in the user’s shoes. When interpreting findings, prioritize what matters most to users rather than personal preferences or assumptions.

- Document and Share Findings: Create a comprehensive usability testing report that summarizes the findings, including both quantitative and qualitative data. Use screenshots, videos, or quotes from participants to illustrate key points. Share this report with the project team and stakeholders.

- Test Early and Often: Incorporate usability testing throughout the product development lifecycle, from early prototypes to final versions. Catching and addressing usability issues early is more cost-effective than fixing them later.

- Measure Task Success and User Satisfaction: In addition to identifying usability issues, track task success rates and user satisfaction levels to gauge the overall user experience.

- Continuous Improvement: After implementing changes based on usability testing, continue to monitor the product’s usability and gather user feedback. Iterate and make ongoing refinements to enhance the user experience.
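
The quantitative side of these practices (task success rates, completion times, and satisfaction levels) can be summarized with very little code. The sketch below is one possible approach, assuming each session is logged as a simple record; the `TaskResult` fields, the 1 to 5 satisfaction scale, and the sample data are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean, median

@dataclass
class TaskResult:
    participant: str
    completed: bool      # did the participant finish the task?
    seconds: float       # time spent on the task
    satisfaction: int    # post-task rating, assumed here to be 1 (low) to 5 (high)

def summarize(results):
    """Summarize one task across participants."""
    if not results:
        raise ValueError("no results to summarize")
    completed = [r for r in results if r.completed]
    return {
        "success_rate": len(completed) / len(results),
        # Time is only meaningful for participants who finished the task.
        "median_time_s": median([r.seconds for r in completed]) if completed else None,
        "mean_satisfaction": mean([r.satisfaction for r in results]),
    }

# Hypothetical results for a single checkout task.
results = [
    TaskResult("P1", True, 42.0, 4),
    TaskResult("P2", True, 65.5, 3),
    TaskResult("P3", False, 120.0, 2),
    TaskResult("P4", True, 51.0, 5),
]

summary = summarize(results)
print(summary)
```

Tracking the same summary before and after a design change gives a simple way to check whether an iteration actually improved the experience, alongside the qualitative observations from the sessions.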

Learn more: What is End User Optimization?

Elevate Your UX with Trymata’s User Testing Solutions

User testing is the cornerstone of creating intuitive, high-performing digital experiences. By understanding user testing, key types, and proven methods, teams can move beyond assumptions and build products that truly resonate with their audience.

But knowledge alone isn’t enough—you need the right tools to act on insights. That’s where Trymata comes in.

Why Trymata?

- End-to-End Website Testing: From prototypes to live sites, test across desktop, mobile, and apps with real users.

- Actionable Insights: Uncover pain points (where users struggle) and gain points (what delights them) through heatmaps, session recordings, and spoken feedback.

- Trusted by Leaders: Join global brands like WeightWatchers and startups alike in leveraging Trymata’s full-stack usability testing platform to boost engagement, conversions, and growth.

Whether you’re a UX designer, product manager, or marketing team, Trymata empowers you to:

Test with precision—no guesswork.

Identify friction points before they impact revenue.

Unleash your best digital experience with data-backed decisions.

Ready to transform your website’s usability? Explore Trymata’s Website Testing Tools Today