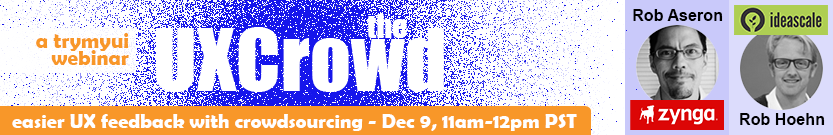

On December 9 we hosted a webinar with Rob Hoehn of IdeaScale (an innovation crowdsourcing company) and Rob Aseron, former head of user research at Zynga (a social games provider), on the uses of crowdsourcing and how it can speed up the user research process and improve feedback. Below is the Q&A segment of the webinar.

What are some of the pitfalls of crowdsourcing?

Rob Hoehn: That’s a great question that tends to come up quite a bit. Obviously there have been some spectacular failures that we’ve seen in crowdsourcing. A lot of it comes down to focus, and a lot of open innovation practitioners bring this up quite often: that the question or the campaign is probably more important than how you run the crowdsourcing campaign or what the ultimate answer is. And that applies to a lot of things of course but it’s particularly important with crowdsourcing, because you have a large crowd and you want to make sure you steer the crowd in the right direction.

The other pretty common pitfall is poor moderation, and that can fall into a lot of categories, of course: obviously filtering out inappropriate behavior or inappropriate language, but also on a larger scale thinking about how you can moderate your crowd and focus them in the right direction. So maybe someone might submit an idea that’s a little bit off topic but still kind of in the same direction – we definitely want to get in there and moderate that and talk to that person or that group of people and steer them in the direction of the question.

You spoke about changing up the demographics of your crowd; wouldn’t modifying the demographics take away from the diversity of opinions that you’re trying to get from it?

Rob Hoehn: A lot of times that depends on the type of crowdsourcing campaign you’re running, and as I mentioned, usually when we run an initial campaign it would be with a larger audience – imagine it like a funnel – and then we winnow down the opinions and focus more on the subject matter experts.

So it’s not that we’re not listening to the larger crowd, it’s just that we would focus down to a smaller crowd in a later stage.

What are the pitfalls of trusting the crowd? How might using the crowd in a user feedback context impede your research process?

Rob Aseron: There can be downsides. You can get focused on the wrong issues. But by and large, particularly in a closed beta, you’ve really got a firm design by this point. If you were using it for ideation I’d be a little more worried that early on you could get off onto the wrong track, but by this point you know the different experiences that you’re providing in your product and there’s really a lot less of a chance to get on the wrong track, which is the main thing I’d be concerned about – forking off into something that’s not a profitable direction.

Using the crowd, I think, in this context is unlikely to have that problem.

What are your tips for crowdsourced usability of mobile experiences, both for mobile web and native apps?

Rit Gautam: Last month, we released our mobile testing beta, so we will be getting video and audio recordings of users’ mobile screens as they go through your user flow. Once that is all in place, we will basically be able to extend this UXCrowd feature to those tests as well. So it doesn’t really matter what form a video comes in as long as we have access to the users and can run them through the same crowdsourcing process and get the same feedback.

Rob Aseron: My biggest tip is to tie your existing data collection through this tool, or whatever tool you use, to behavioral data whenever you can. There are many data streams, and you want to use several of them and spend effort making sense of what the different data streams tell you, so you’re getting one story from them.

What, in your experience, is the most difficult part of getting feedback from a beta?

Rob Aseron: There tend to be a lot of front-end preparation issues in getting the beta closed in a way that’s controlled. It’s all about preparation. Once you have everyone in the beta, in my experience it’s gone pretty smoothly. But doing all your work up front to make sure everyone can access everything is usually the kind of thing you test over and over again to make sure everything is solid.

When you’re crowdsourcing ideation, and expecting people to vote on each other’s ideas, how genuine is that expectation and how many times do people really read ideas before voting on the best ones versus just voting on the first few they see and moving on? Do you account for that in any way?

Rob Hoehn: Yeah, that’s actually a really great question. I was actually just part of a discussion yesterday on a design panel about what numbers we put next to people’s activities, and for instance next to our profiles we have a leaderboard and people are encouraged to vote on ideas, submit comments, et cetera but you’ll notice one metric that’s missing from that is, “Did you actually read any of the ideas?” And it’s funny because you’ll notice that the opposite behavior – encouraging people to respond and not to listen – is really common on the internet if you look at any comment board; people are encouraged to leave a comment but we don’t really even know if the commenter actually read the article or not. And I think it’s a big problem on the internet that we need to solve.

We started to take some steps about a year ago implementing a feature whereby you were required to read a certain number of ideas before you could actually vote or comment or participate in any of our communities but I think we need to take it a step further and actually have a metric and a quantitative element around that in our leaderboard to encourage people to actually read the ideas or any type of feedback we’ve gotten in an IdeaScale community. That’s actually an idea that we’re working on, and I don’t think we have all the solutions yet but we’ll get there.

When you have a set of ideas from the UXCrowd, won’t some people have the same idea? Why would they vote up someone else’s response if it’s the same as theirs, and would they purposely downvote similar opinions? What do you do in that case, or how do you collate similar responses from different users?

One of the functionalities I see us implementing in UXCrowd is the ability for you as the customer not only to look at these responses but also to start collating them: if someone gave a response and five other responses express the same idea, you can group them together under the same umbrella and aggregate all the votes. That way you can home in on, say, the 3 or 4 big themes in this huge sea of responses and ideas. That is something we’ll be working on putting together.
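To make the collation idea concrete, here is a minimal sketch of grouping similar responses under one theme and summing their votes. It is an illustration only, not how UXCrowd works: the function name `aggregate_votes_by_theme`, the response IDs, the theme labels, and the vote counts are all hypothetical, and the grouping is assumed to be done by hand by the customer.

```python
from collections import defaultdict


def aggregate_votes_by_theme(votes_by_response, theme_of):
    """Sum votes for responses that were grouped under the same theme.

    votes_by_response: dict of response ID -> vote count (hypothetical data).
    theme_of: dict of response ID -> theme label assigned by the reviewer.
    Responses without a theme are kept as their own single-item group.
    """
    totals = defaultdict(int)
    for response_id, votes in votes_by_response.items():
        theme = theme_of.get(response_id, response_id)
        totals[theme] += votes
    # Highest-voted themes first, so the big themes surface at the top.
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical example: four responses, two of which express the same idea.
votes_by_response = {"r1": 4, "r2": 3, "r3": 5, "r4": 1}
theme_of = {
    "r1": "confusing checkout",
    "r2": "confusing checkout",
    "r3": "slow search",
}

top_themes = aggregate_votes_by_theme(votes_by_response, theme_of)
for theme, votes in top_themes:
    print(theme, votes)
```

Summing votes per theme means an idea that several people submitted separately is not penalized for having its votes split across duplicates.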

Watch the recording of the webinar