Creating products, applications, and websites is a daily challenge in which different methodologies must coexist to produce an optimal result. Among these, evaluative research has emerged as a crucial tool for assessing program effectiveness and guiding strategic improvements. This systematic approach to measuring outcomes and impact helps organizations understand what works, what doesn’t, and why.

By combining rigorous methodology with practical insights, evaluative research bridges the gap between theoretical frameworks and real-world implementation, enabling institutions to optimize their interventions and demonstrate value to stakeholders.

As resources become increasingly constrained and accountability demands grow, mastering the art and science of evaluative research has become essential for program managers, policy makers, and organizational leaders alike. If you want to learn more about this methodology, you have come to the right article. Keep reading to discover the benefits and how to apply this methodology to your projects.

What is Evaluative Research?

Evaluative research is defined as a research method that assesses the design, implementation, and outcomes of programs, policies, or products.

Its primary purpose is to determine the effectiveness, efficiency, and relevance of these entities in achieving their intended goals. By collecting and analyzing data, evaluative research helps stakeholders understand whether a program or intervention is functioning as intended and where improvements can be made.

This form of research is essential in various fields, including education, healthcare, social services, and business, as it provides evidence-based insights that inform decision-making and policy development.

In evaluative research, the focus is often on measuring outcomes against predefined criteria or standards using quantitative and qualitative methods for data collection.

Quantitative methods might include surveys, standardized tests, or statistical analysis, which provide numerical data on program outcomes.

Qualitative methods, such as interviews, focus groups, and observations, offer deeper insights into the experiences and perspectives of participants. Combining these methods allows researchers to present a holistic view of a program’s impact, identifying both strengths and areas needing improvement.

An essential aspect of evaluative research is its cyclical nature. It is not a one-time activity but an ongoing process that requires continual assessment and adjustment. This iterative process involves setting clear objectives, collecting and analyzing data, interpreting the findings, and making recommendations for improvement. By continuously evaluating and refining programs, organizations can ensure they remain effective and responsive to the needs of their target populations. This iterative approach also promotes accountability and transparency, as it provides a documented trail of evidence supporting the program’s performance and adjustments over time.

For example, consider a public health intervention aimed at reducing childhood obesity rates in a community. An evaluative research study on this intervention would begin by defining specific, measurable objectives, such as a 10% reduction in obesity rates over two years. Researchers would then collect baseline data on the current obesity rates, dietary habits, and physical activity levels of children in the community. Throughout the intervention, they would gather data at multiple points to assess progress.

Quantitative data might include BMI measurements and surveys on dietary intake, while qualitative data could involve interviews with parents and teachers about their perceptions of the program’s effectiveness. By analyzing this data, researchers can determine if the intervention is on track to meet its goals, identify any barriers to success, and make informed recommendations for adjustments to improve outcomes.
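To make the objective in this example concrete, here is a minimal sketch of how progress against the target could be checked. It assumes the 10% goal is a relative reduction from the baseline rate (the example does not say whether it means relative change or percentage points), and the function names and figures are hypothetical, chosen only for illustration.

```python
def relative_change(baseline_rate: float, current_rate: float) -> float:
    """Relative change from baseline; -0.10 means a 10% relative reduction."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (current_rate - baseline_rate) / baseline_rate


def target_met(baseline_rate: float, current_rate: float,
               target_reduction: float = 0.10) -> bool:
    """True when the relative reduction reaches or exceeds the target."""
    return -relative_change(baseline_rate, current_rate) >= target_reduction


# Hypothetical figures for illustration only, not real data.
baseline = 0.20   # share of children classified as obese at baseline
year_two = 0.175  # share at the two-year follow-up

change = relative_change(baseline, year_two)
print(f"Relative change: {change:.1%}")
print("Target met:", target_met(baseline, year_two))
```

With these made-up figures, the rate falls by 2.5 percentage points, which is a 12.5% relative reduction. Stating which of the two measures the objective uses before collecting data avoids disputes when the results are interpreted.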

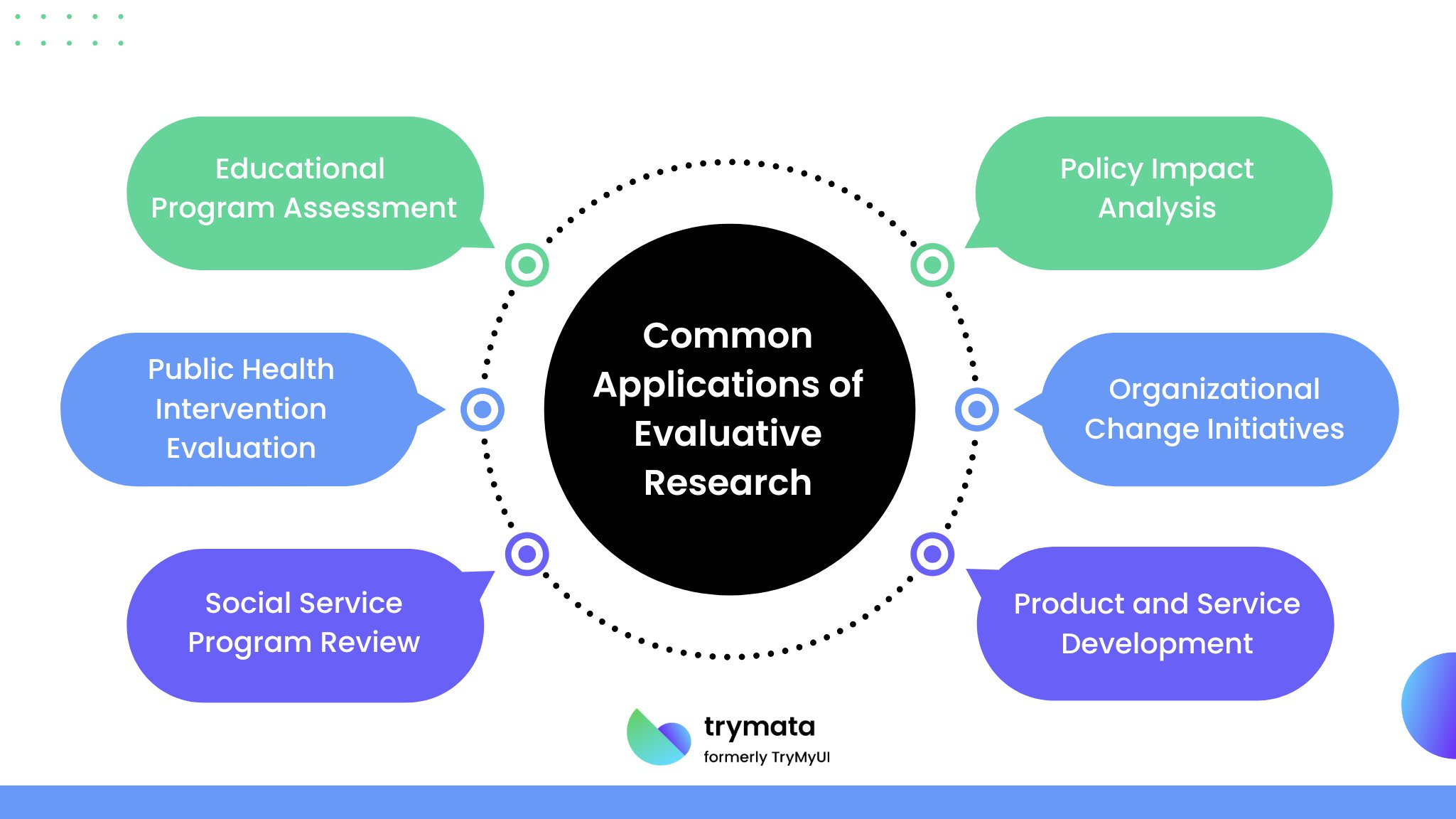

Common applications include:

- Educational program assessment

- Public health intervention evaluation

- Social service program review

- Policy impact analysis

- Organizational change initiatives

- Product and service development

Key Characteristics of Evaluative Research

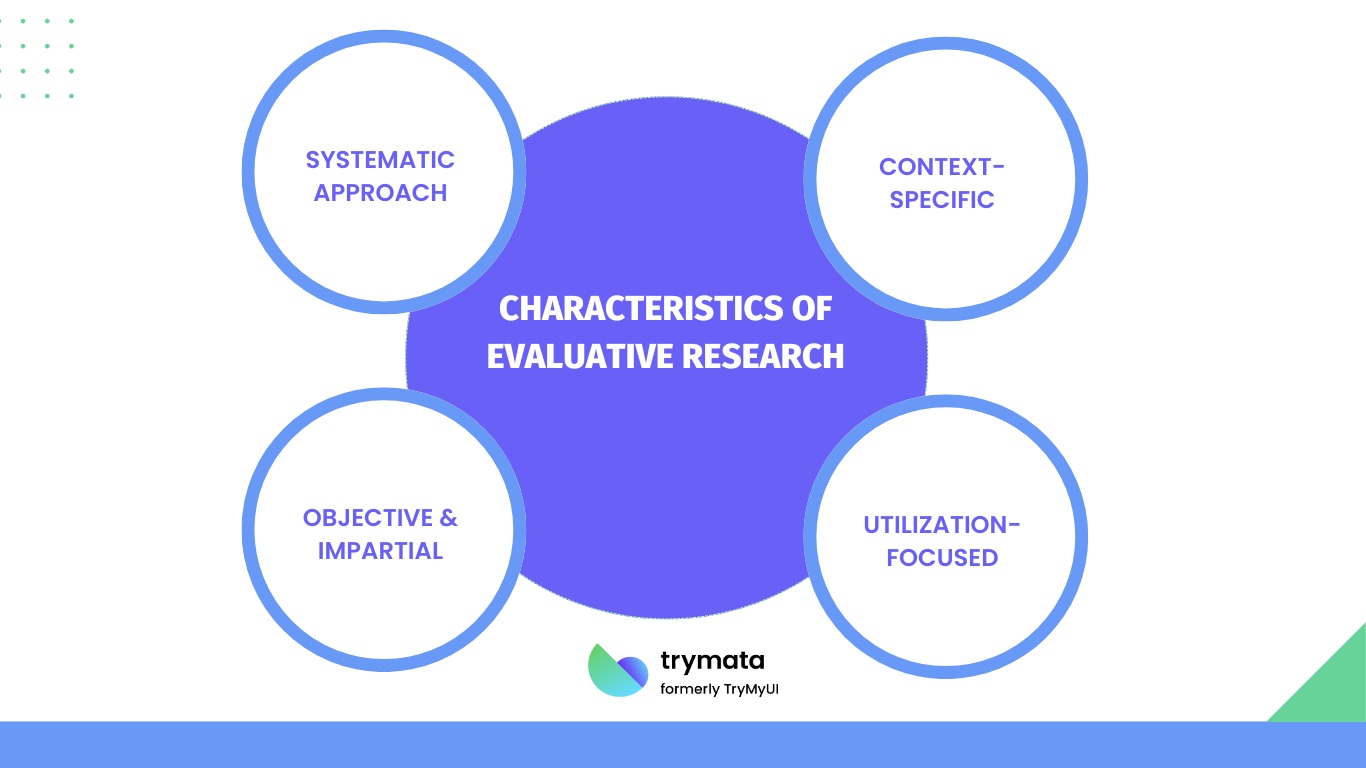

Evaluative research has several key characteristics that distinguish it from other types of research. These characteristics ensure that the research is systematic and objective and that it yields actionable insights. Here are the primary characteristics of evaluative research:

1. Systematic Approach

Evaluative research follows a systematic process with clearly defined steps: setting objectives, selecting appropriate methods, collecting data, analyzing results, and reporting findings.

2. Objective and Impartial

Objectivity is a cornerstone of evaluative research. The researchers strive to remain neutral and impartial, ensuring that their findings are not influenced by personal biases or external pressures. This objectivity is critical for the credibility of the research, as stakeholders need to trust that the conclusions drawn are based on evidence rather than preconceived notions or agendas.

3. Context-Specific

Evaluative research is tailored to the specific context in which the program, policy, or product is implemented. This means that the evaluation considers the unique characteristics, needs, and conditions of the environment and population being studied. Context-specific evaluations provide more relevant and actionable insights, as they take into account the particular circumstances that might affect the outcomes.

4. Utilization-Focused

The ultimate goal of evaluative research is to provide useful information that can inform decision-making and improve programs or policies. Therefore, it is utilization-focused, meaning that the research is designed with the end-users in mind. This involves engaging stakeholders throughout the process, from setting evaluation objectives to interpreting findings and implementing recommendations. By focusing on utility, evaluative research ensures that the results are practical and directly applicable to improving the subject of the study.

Evaluative Research Method: Key Stages with Examples

Evaluative research involves several key stages that ensure the systematic and objective assessment of programs, policies, or products. Here are the primary stages along with examples to illustrate each step:

- Planning and Design

In this first stage, researchers define the objectives of the evaluative research. They also determine the criteria for success and select the appropriate methodology.

Example: A nonprofit organization wants to evaluate the effectiveness of its after-school tutoring program. The objective here is to determine if the program improves students’ academic performance.

- Data Collection

Researchers gather quantitative and qualitative data relevant to the evaluation objectives. This can involve surveys, interviews, observations, and the collection of existing records or data sets.

Example: For the after-school tutoring program, researchers might collect data through pre- and post-program academic tests, surveys of students and parents about their satisfaction with the program, and interviews with teachers about observed changes in student performance.

- Data Analysis

In this stage, researchers analyze the collected data to identify patterns, trends, and relationships. They use statistical methods for quantitative data and thematic analysis for qualitative data.

Example: Researchers compare the pre- and post-program test scores to see if there is a statistically significant improvement. They also review survey responses to understand participants’ perceptions and conduct thematic analysis on interview transcripts to identify common themes regarding the program’s impact.

- Interpretation

Researchers interpret the results in the context of the evaluation objectives and criteria for success. They consider whether the program, policy, or product met its goals and what factors contributed to or hindered its effectiveness.

Example: The analysis shows a significant improvement in math scores but no change in reading scores. Surveys and interviews reveal that students found the math tutoring sessions more engaging and aligned with their curriculum, while the reading sessions were less structured.

- Reporting and Dissemination

The findings are compiled into a research report that presents the final results and the recommendations that follow from them. The report is then shared with the relevant stakeholders.

Example: The final report for the after-school tutoring program includes detailed findings on the academic performance improvements in math, the lack of change in reading, and participants’ feedback. Recommendations might include revising the reading curriculum to make it more engaging and structured.

- Action and Follow-Up

Based on the evaluation findings, stakeholders take action to improve or adjust the program, policy, or product. Follow-up evaluations may be conducted to assess the impact of these changes.

Example: The nonprofit organization decides to overhaul the reading tutoring curriculum based on the recommendations. They also plan a follow-up evaluation after the revised program has been implemented to assess its effectiveness.
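The Data Analysis stage of the tutoring example can be sketched in code. The block below is one possible approach, not the method the example prescribes: it uses an exact sign-flip permutation test on paired pre- and post-program scores, chosen here instead of a paired t-test so that it runs with the Python standard library alone. The function name and all scores are hypothetical, and the scores are assumed to be integers.

```python
from itertools import product
from statistics import mean
from typing import Sequence, Tuple


def paired_sign_flip_test(pre: Sequence[int],
                          post: Sequence[int]) -> Tuple[float, float]:
    """Return (mean gain, two-sided p-value) for paired pre/post scores.

    Under the null hypothesis of no effect, each student's gain is equally
    likely to be positive or negative, so every sign pattern is enumerated.
    """
    if len(pre) != len(post) or len(pre) == 0:
        raise ValueError("pre and post must be non-empty and the same length")
    gains = [after - before for before, after in zip(pre, post)]
    observed = abs(sum(gains))
    extreme = 0
    total = 0
    for signs in product((1, -1), repeat=len(gains)):
        flipped = abs(sum(s * g for s, g in zip(signs, gains)))
        if flipped >= observed:  # at least as extreme as the observed gain
            extreme += 1
        total += 1
    return mean(gains), extreme / total


# Hypothetical scores for eight students, for illustration only.
math_pre = [62, 70, 55, 68, 74, 60, 66, 71]
math_post = [70, 75, 63, 72, 80, 69, 70, 78]
reading_pre = [64, 58, 71, 66, 60, 73, 69, 62]
reading_post = [65, 57, 70, 68, 59, 74, 68, 63]

for subject, pre, post in [("math", math_pre, math_post),
                           ("reading", reading_pre, reading_post)]:
    gain, p_value = paired_sign_flip_test(pre, post)
    print(f"{subject}: mean gain {gain:.2f}, p = {p_value:.4f}")
```

Because the test enumerates all 2^n sign patterns, it is only practical for small groups (roughly 20 students or fewer). For larger samples, a random subset of sign patterns or a conventional paired test from a statistics library would be the usual substitute.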

Best Practices for Conducting Evaluative Research in 2025

Evaluative research in 2025 benefits from technological advancements, evolving methodologies, and an increased emphasis on ethical considerations and stakeholder involvement.

Here are the key best practices for conducting evaluative research in 2025:

1. Incorporate Technology and Data Analytics

Leverage advanced data analytics, machine learning, and AI to enhance data collection, analysis, and interpretation. Utilize tools such as real-time data dashboards, automated data processing, and predictive analytics to gain deeper insights and improve decision-making.

2. Ensure Ethical Standards and Data Privacy

Obtain informed consent from participants, keep their data confidential, and comply with applicable data protection regulations such as the GDPR.

3. Engage Stakeholders Throughout the Process

Involve stakeholders from the planning stage to the dissemination of results. This includes program beneficiaries, implementers, funders, and policymakers. Their input can help shape the evaluation’s focus and ensure the findings are relevant and actionable.

4. Use Mixed Methods Approaches

Combine quantitative and qualitative methods to provide a comprehensive view of the program or policy being evaluated. Quantitative data offers measurable evidence of outcomes, while qualitative data provides context and depth to understand the why and how behind those outcomes.

5. Focus on Context-Specific Evaluations

Tailor the evaluation to the specific context of the program or policy. Consider cultural, economic, and social factors that may influence the outcomes and relevance of the evaluation.

6. Promote Transparency and Accountability

Maintain transparency in the evaluation process by clearly documenting methods, data sources, and analytical techniques. Share both positive and negative findings to provide a balanced view of the program’s effectiveness.

7. Use Iterative and Adaptive Evaluation Designs

Use iterative and adaptive approaches that allow for ongoing adjustments based on interim findings. This helps evaluators respond to emerging issues and improve the program in real time.

8. Invest in Capacity Building and Training

Invest in capacity building and training for evaluators and stakeholders. Ensure they have the skills and knowledge needed to conduct rigorous evaluations and interpret findings accurately.

9. Consider Longitudinal and Continuous Evaluation

Consider longitudinal and continuous evaluation approaches to track the long-term impacts of programs and policies. This provides insights into sustainability and long-term effectiveness.

10. Integrate Sustainability and Scalability

Evaluate not only the immediate impact but also the sustainability and scalability of programs and policies. Assess how they can be maintained and expanded over time to benefit a larger population.