Not sure how to go about writing the tasks for your usability test? Then you’re already on the right track: you recognize the importance of getting your tasks right.

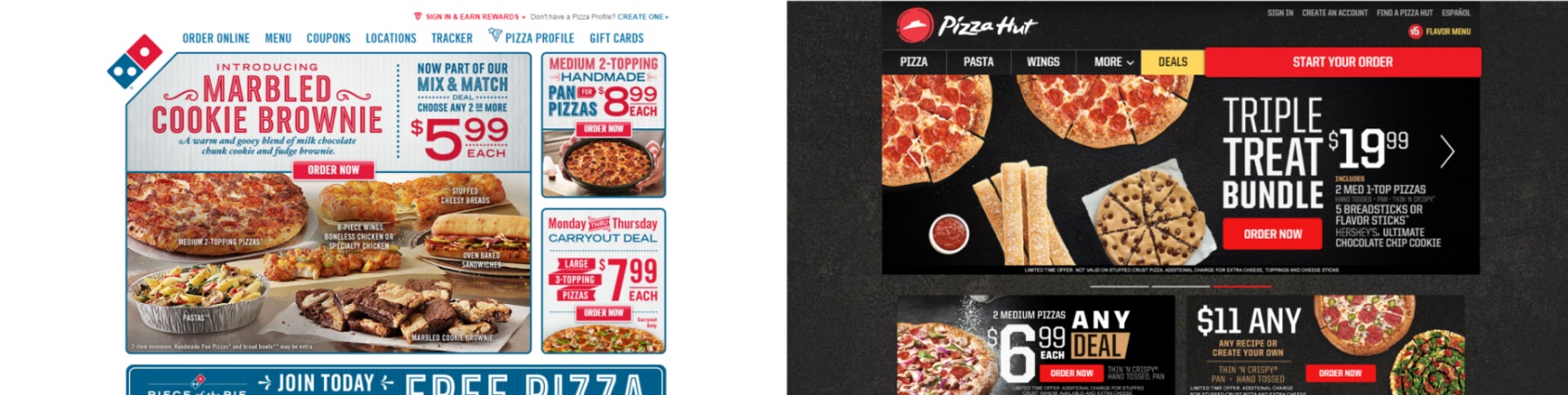

A poorly written test script probably won’t get you the data you need, and may even produce results that are misleading or inaccurate. Below are the scenario and tasks we used for the UX Wars: Domino’s vs Pizza Hut usability tests, with commentary on why each was included and how we chose the wording.

Scenario

You’re having a late night in with a few friends and people are starting to get hungry, so you decide to order a couple of pizzas for delivery.

Our demographic for this test was people under 28 years old – the age bracket we figured was most likely to go online to order a couple of cheap, greasy pizzas. For good measure, we added an extra requirement: Must like pizza.

We tried to pick a scenario that was general enough to be relatable to many people, but specific enough to evoke familiar memories that users could draw on to flesh out their test experience. A “late night in with a few friends” could be many things – video games, board games, movie night, even studying or a work brainstorm.

Most people can think of a time that fits that description (they might have even ordered pizza then), and that helps them to contextualize the test and add detail and realism to the experience.

We saw users do exactly this in the results. As one tester put it: “I’ll add Italian sausage. That’s not really my favorite topping, but my friends like that.”

Task 1

Have you ordered pizza online before? Which website(s) did you use?

This task was included to see what previous experience users had that might affect their performance. Someone who had used the site before would be more likely to know how it works, where to find things, and how to avoid certain potholes.

On the other hand, someone who had used a competitor’s website might have more difficulty, because they would already have expectations for how such a site should look and work. For example, they may struggle with an element only because it differs from what they used before.

Knowing this information helps us to account for users’ biases and understand varying responses to the same elements.

We sometimes include a requirement that users must not have used the target website before, but we chose not to for this test, since we were specifically interested in comparing what users liked across different sites.

Task 2

Does this site have any deals you can take advantage of for your order?

Domino’s and Pizza Hut both feature deals heavily on their home pages, suggesting this is a primary pathway for visitors ordering through the two websites. We therefore included this task to reflect a typical user journey on the sites, and also because saving money with a deal seemed a likely priority for our young tester pool.

Notice, however, that this task was phrased as a question rather than an instruction – in other words, there is a “No” option. If we had written, “Choose a deal to use for your order,” the task would not have accounted for the possibility that none of the deals matched what the user was looking for.

Most users did pick a deal to apply to their order, but others chose not to, particularly on Domino’s where they didn’t see an offer for multiple pizzas. Ultimately, leaving this task open-ended allowed the experience to unfold more naturally.

Task 3

Customize your pizzas with the toppings, sauce, and crust options you would like.

This task was the meat of the test: building the pizza. We deliberately wrote the plural “pizzas” to remind users that the scenario called for a “couple of pizzas,” and because we wanted to see them go through the flow more than once. This way users would be more likely to explore different customization options, and we would also be able to see how well they had picked up the ins and outs of the system after one pass.

Task 4

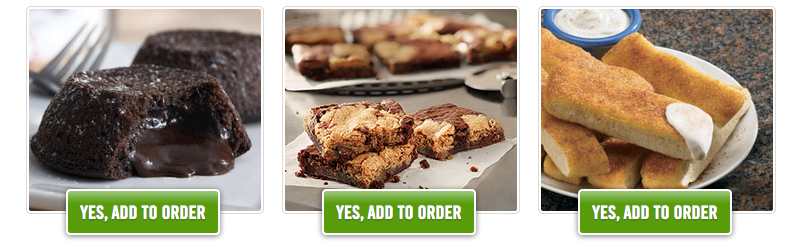

Finalize your order with any other items you want.

Both sites offer (and heavily promote) a number of other items including sides, drinks, and desserts; we wrote this task to give users a chance to add these things to their order.

Notice that the wording of the task leaves a user room to say “Nah, I’m good” and move on. However, since some users would want to add these items, and especially since some deals expressly included additional items, we decided to place this task between the customization step and the checkout step.

Task 5

Go through the checkout until you are asked to enter billing information.

This task is a pretty straightforward instruction. We made sure to ask our testers to stop once they reached the billing form, since they wouldn’t be completing the order for this exercise.

Task 6

Please go to [competitor’s website] and go through the pizza ordering process there too. Compare your experience as you go.

We typically don’t have the same users go to both sites when we do comparative usability testing, for several reasons:

- The first site they use inevitably colors their experience on the second

- Sending users through two flows tends to produce high tester fatigue and reduce the quality of the results

- Using two separate tester pools yields a more reliable comparison between quantitative metrics

However, after running a few tests on each site with just Tasks 1-5, we found that the sessions ran shorter than we expected, and since the logic of each site is pretty basic, users were being relatively lenient in their feedback.

We decided that sending users to both sites sequentially would be the best path, as it would force them to make comparisons and critique the very different treatments of Domino’s and Pizza Hut. We judged that tester fatigue would not be unacceptably high, given the shorter sessions and simple learning curve.

We had half of the testers start on Domino’s and then go to Pizza Hut, while the other half used the sites in the opposite order.

Task 7

Which site was easier to use, and why? Which would you use next time you order pizza online?

As researchers, we have more biases than we realize. You can watch all the user videos and list every problem on both websites, yet still reach a different conclusion than your testers because you perceived and weighted the issues differently.

Something that seems important to you may not have been all that important to the users; something that bugged them earlier in the test may be forgotten (or forgiven) by the end (always remember, though, that in the ‘real world’ those users may never have gotten to ‘the end’).

We ended with this task to get a clear answer from the users without letting our own judgment cloud the final verdict. It asks the question that matters most when judging a design’s success: which site would you come back to?

This information helped us to see what mattered most to users overall and which website’s UX was preferable for more users.

Recap: Full task script

Scenario – You’re having a late night in with a few friends and people are starting to get hungry, so you decide to order a couple of pizzas for delivery.

1. Have you ordered pizza online before? Which website(s) did you use?

2. Does this site have any deals you can take advantage of for your order?

3. Customize your pizzas with the toppings, sauce, and crust options you would like.

4. Finalize your order with any other items you want.

5. Go through the checkout until you are asked to enter billing information.

6. Please go to [competitor’s website] and go through the pizza ordering process there too. Compare your experience as you go.

7. Which site was easier to use, and why? Which would you use next time you order pizza online?

Final notes

The main thing to remember is that writing good usability testing tasks comes down to communication. Your tasks have to make sense to the average user. Common language that is accessible and easy to digest is superior to very technical, stiff writing. Don’t talk like a researcher; be clear and straightforward.

It’s important not to veer to the other end of the spectrum, though: don’t try to have personality or be ‘fun’ when you write your tasks. You don’t want your testers to like you. Ideally, they should forget that you exist. One of the advantages of unmoderated usability testing is that it removes the human connection between researcher and participant, which can skew the results. Don’t squander that advantage.


The great thing is, if your tasks aren’t perfect the first time, you can always make adjustments and improve your test script for the next batch of tests. Every time you test, you don’t just learn about your website – you learn how to do better research, too.