There is an art to watching user testing videos. Plenty of insight, of course, is available right at the surface level. But beneath the surface is much, much more.

For example, you might hear a user say during their test video that they want a certain feature, or that they dislike a certain interaction or layout. You may see them click on the wrong button or link. All of that is useful information.

Look deeper, though, and you may find complexities that the user didn’t express out loud.

When a user describes a feature they want added, they’re really talking about a problem they want solved. The actual best solution for that problem may be the feature idea they asked for, or it may be something else completely that solves it better.

Sometimes, a user might confidently state that they’ve completed a task, but when you watch what happened on their screen, you see that they’ve misunderstood what they achieved on your site. They think they’ve finished one thing, but actually ended up doing something entirely different.

Not everything in a user test is true at the surface level – but there is always a truth to be found in it.

Emotion recognition: Adding a new dimension to user testing data

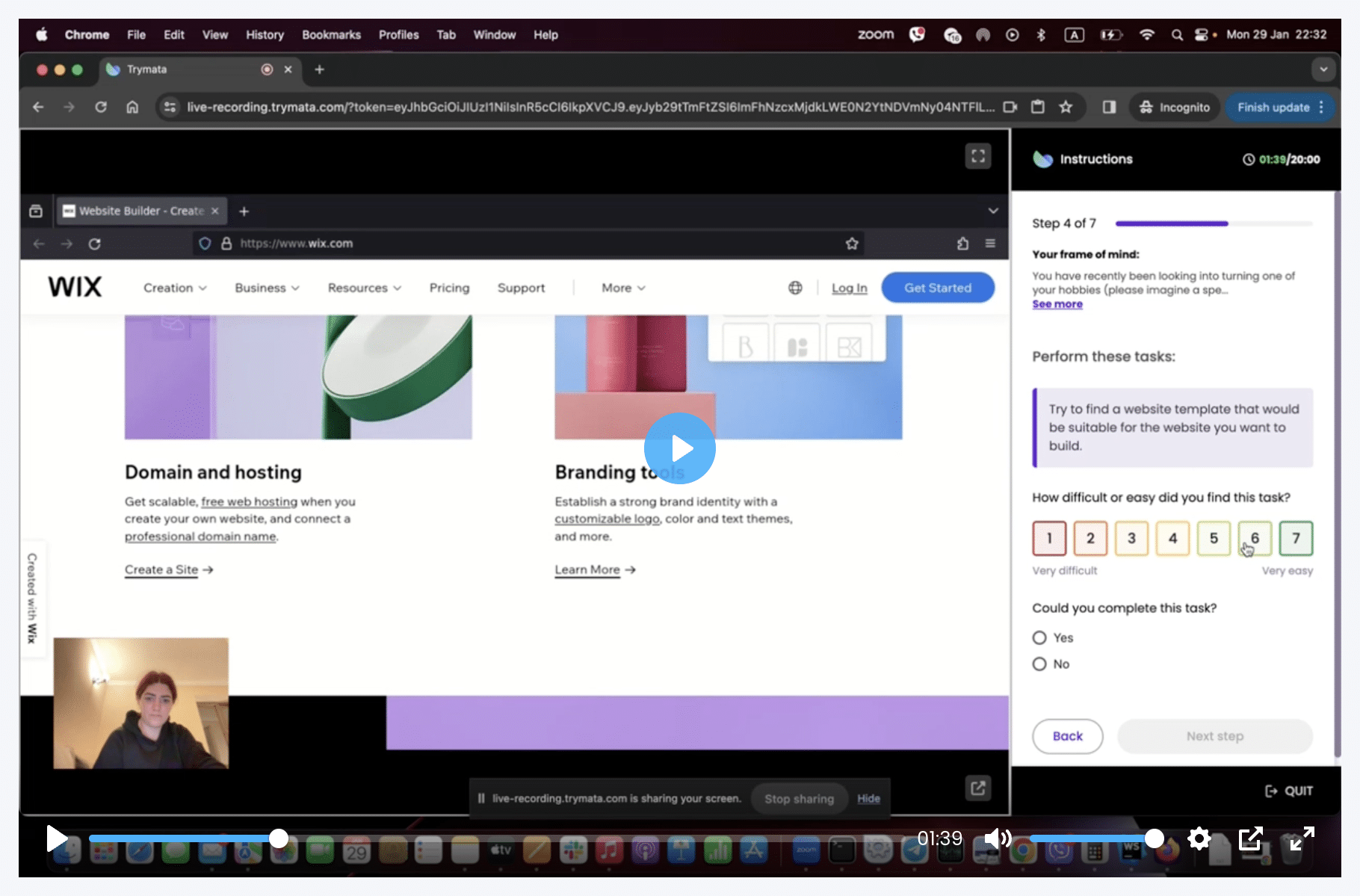

Most remote user testing platforms (like us at Trymata) will offer you screen-recorded video with user-narrated audio. Above all else, the user’s screen and voice are the core pieces of data required to begin forming an understanding of your user experience.

Getting beyond the basics, face camera video is another piece of data that many UX pros love. Why? Because it helps you go beyond surface-level analysis.

Watching the user’s face can reveal things that their words and screen may not express. When they click on the wrong button, does their face show irritation? Or do they look pleasantly surprised to discover an option they didn’t even know was there? Perhaps their facial expression remains neutral because they haven’t even realized they took the wrong path.

Adding this extra dimension to your user testing results strengthens your skill in the art of user testing analysis: by combining multiple pieces of data, you get closer to a true understanding of your UX.

Face cam video is now available with Trymata user tests

At Trymata, we’ve always offered not just screen and voice data, but a wide variety of qualitative and quantitative tools for getting better UX insights.

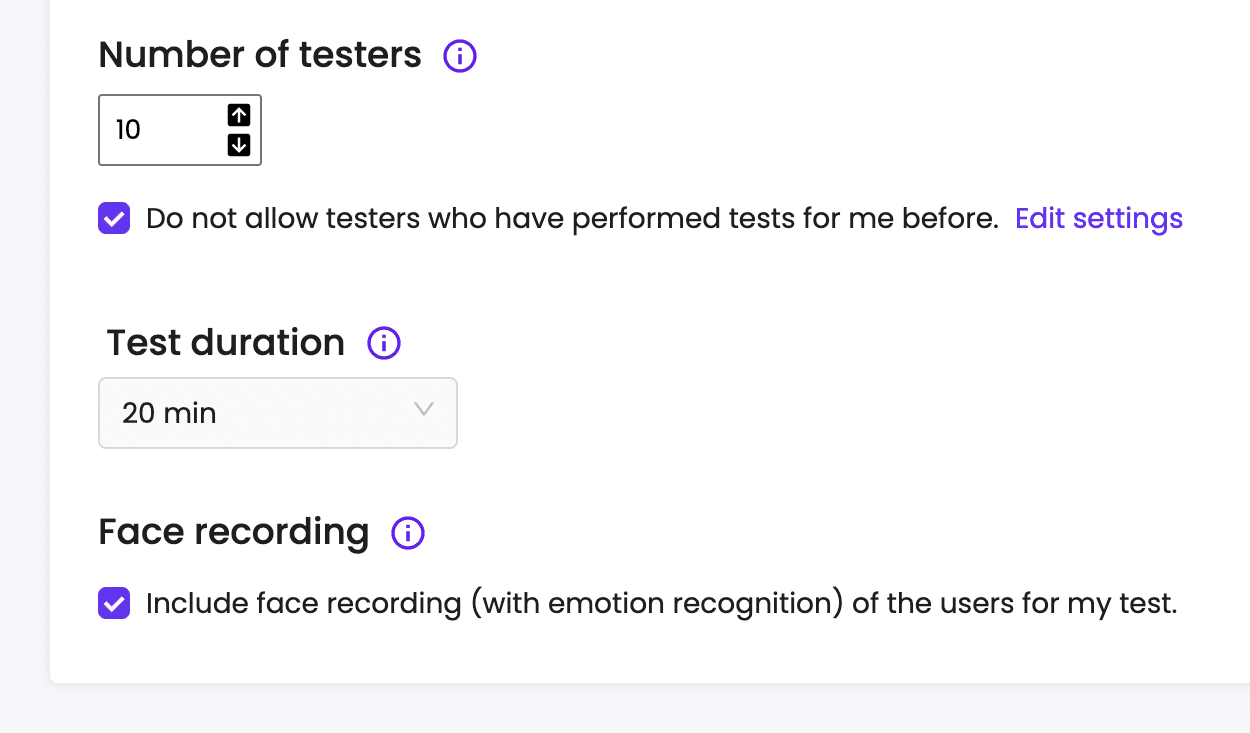

Now, we’re adding face cam video to that suite of tools! If you turn on the new face camera feature in Step 1 of your user test setup, you’ll get a face recording for every user, along with their screen and voice.

We didn’t just add face camera though – while we were at it, we went a lot further…

Automatic A.I.-powered emotion recognition

With face cam video newly available, we decided to harness the power of A.I. to make the most of it! Before you watch even 1 minute of your test videos, you’ll be able to see a chart of every user’s emotions throughout their video session.

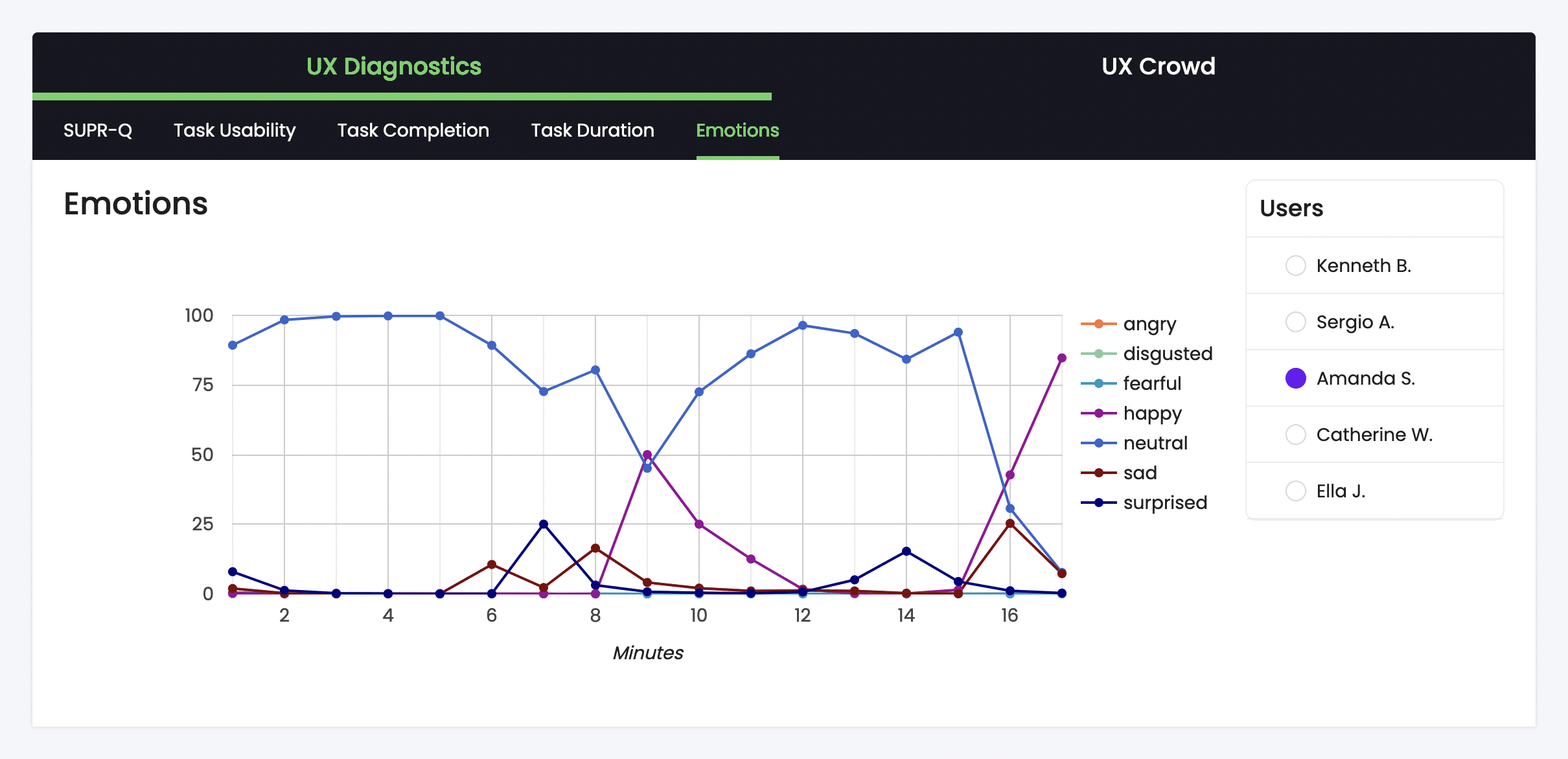

When your videos come in, our emotion recognition model analyzes the users’ facial expressions for 6 different emotions: anger, disgust, fear, happiness, sadness, and surprise (plus a 7th “neutral” emotional state).

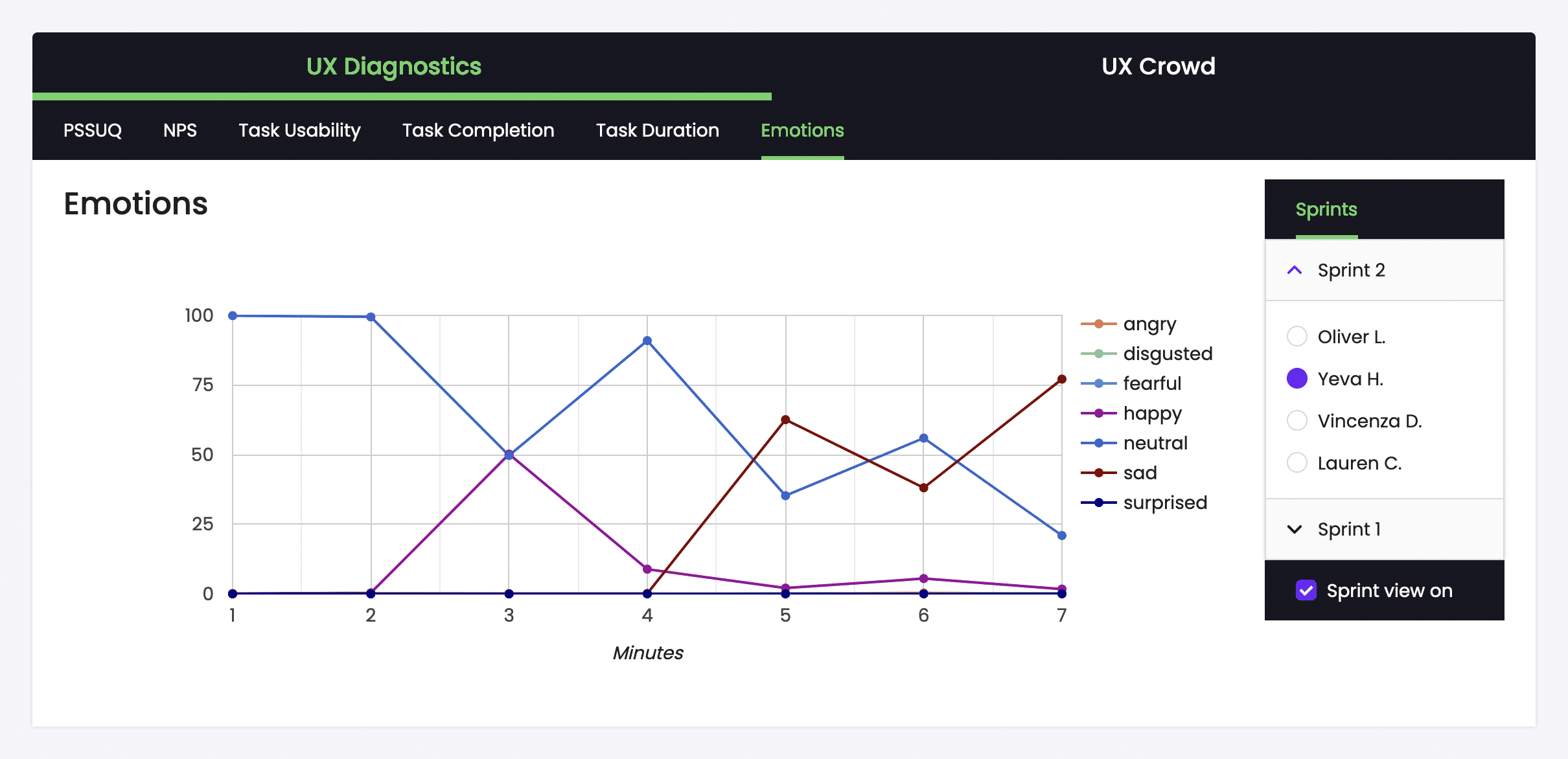

Every moment of each video is assigned an emotional value based on this model. Then, all of that emotional data is aggregated into a line graph, like the one above. Your emotion graphs (found in the UX Diagnostics section of your test results) show what each user was feeling during each 1-minute block of their video session.

For example, in the graph above, we can see that this tester stayed completely neutral for the first 2 minutes of her video, then showed a spike in happiness in the 3rd minute. That spike has mostly trailed off by the 4th minute; then, in the final 3 minutes, she instead expresses a significant (and rising) amount of sadness.

Already, we have a solid foundational understanding of the emotions this user went through while performing her test. Now, when we open her video, we can cross-reference these insights with the video index to understand which emotions coincided with which tasks, or pieces of the user flow.
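To make the 1-minute aggregation idea concrete, here is a minimal Python sketch. It is only an illustration: the input format (timestamped score dictionaries), the function name `aggregate_by_minute`, and the use of a simple average are all assumptions, not a description of how Trymata's model or graphs actually work.

```python
from collections import defaultdict

# The 6 emotions plus the "neutral" state named in this post.
EMOTIONS = ("anger", "disgust", "fear", "happiness",
            "sadness", "surprise", "neutral")


def aggregate_by_minute(samples):
    """Average per-moment emotion scores into 1-minute blocks.

    `samples` is a hypothetical list of (timestamp_seconds, scores) pairs,
    where `scores` maps emotion names to values between 0 and 1.
    Returns {minute_index: {emotion: mean_score}} with 0-based minutes.
    """
    sums = defaultdict(lambda: dict.fromkeys(EMOTIONS, 0.0))
    counts = defaultdict(int)
    for timestamp, scores in samples:
        minute = int(timestamp // 60)
        counts[minute] += 1
        for emotion in EMOTIONS:
            # Emotions missing from a sample count as 0 for that moment.
            sums[minute][emotion] += scores.get(emotion, 0.0)
    return {
        minute: {e: sums[minute][e] / counts[minute] for e in EMOTIONS}
        for minute in sorted(sums)
    }


# Made-up samples: neutral in the 1st minute, mixed in the 3rd.
samples = [
    (10, {"neutral": 1.0}),
    (50, {"neutral": 1.0}),
    (130, {"happiness": 0.75, "neutral": 0.25}),
    (170, {"happiness": 0.25, "neutral": 0.75}),
]
per_minute = aggregate_by_minute(samples)
```

Each entry in `per_minute` corresponds to one point per emotion on a line graph like the one described above. Minutes with no samples are simply absent from the result.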

Applying emotion recognition data for better user insights

There are a few ways you can put this additional layer of information to use.

You could use it, for example, to pick out which segments of video to watch. Imagine you’ve just gotten your test results back, and when you look through your emotion recognition graphs, one specific tester displays significant negative emotions during their session. Watching those parts of their video might be a good place to start, to see where things are going wrong with your UX.
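If you had per-minute emotion scores in hand, picking out the segments to watch first could look something like the sketch below. The grouping of anger, disgust, fear, and sadness as "negative", the 0.3 threshold, and the function name `minutes_to_review` are all assumptions made for illustration; tune them to your own judgment.

```python
# Assumed grouping: which emotions count as "negative" is a judgment call.
NEGATIVE = ("anger", "disgust", "fear", "sadness")


def minutes_to_review(per_minute, threshold=0.3):
    """Return the minutes whose combined negative score meets the threshold.

    `per_minute` is a hypothetical {minute_index: {emotion: score}} mapping.
    """
    flagged = []
    for minute, scores in sorted(per_minute.items()):
        negative_total = sum(scores.get(e, 0.0) for e in NEGATIVE)
        if negative_total >= threshold:
            flagged.append(minute)
    return flagged


# Made-up scores for a 4-minute session.
session = {
    0: {"neutral": 1.0},
    1: {"happiness": 0.5, "neutral": 0.5},
    2: {"sadness": 0.5, "neutral": 0.5},
    3: {"sadness": 0.75, "anger": 0.125},
}
flagged = minutes_to_review(session)  # minutes 2 and 3 are flagged
```

A list like `flagged` is only a starting point for where to begin watching, not a replacement for reviewing the session itself.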

On a larger scale, though, emotion recognition data can and should be used as another dimension for understanding the totality of your user testing results – not just for picking out specific video clips. Beyond the moments specifically associated with negative emotions, your reading of any piece of video can be deepened by cross-referencing it with the user’s emotional data.

Consider the extra insight to be gained by comparing anything a user says (perhaps making use of our video transcription feature) to what their face tells you. Stating that they like some aspect of your product or platform, while their face remains neutral, has a different meaning than saying the same while their face shows happiness – or surprise.

Not all test participants have equal ability to verbally express their inner thoughts and emotions, either. Some users excel at it; consequently, these users’ opinions can sometimes be weighted too heavily during research, just because they are the most easily understood. Taking into account the unspoken information from face cam data can help to even out this bias, giving us greater insight into the experience of those users who aren’t as verbally forthcoming.

How to start using Trymata’s face camera & emotion recognition features

User face camera and emotion recognition data are available at our highest user testing tier, the Enterprise Plan.

Get in touch with our team to chat about doing your user testing research with Trymata:

Schedule a call >

You can also try out all Enterprise-level features completely free on our 2-week trial! Sign up here to get started >