What’s the most difficult thing about doing usability testing? For many, it’s finding the time to analyze the results. Video data is time-consuming to watch and analyze, and scaling up is even harder.

This challenge is why we at Trymata created the UX Crowd feature in the first place, more than a decade ago. Now, after a hiatus and an overhaul, it’s available again to help our customers get UX insights faster.

If you’re already familiar with this feature, the basic functionality is unchanged. However, you’ll now see a refreshed UI, as well as changes to the tester flow that improve the quality and consistency of results compared to prior iterations.

What is the UX Crowd?

The UX Crowd is an original feature exclusive to the Trymata platform. It was developed based on crowdsourcing principles, harnessing the power of “the crowd” to surface key qualitative insights for medium- to large-scale UX studies.

With the UX Crowd, you can get a quick window into the major takeaways from your test data before even starting to watch your videos.

How do we facilitate this? It’s a 2-step process.

1. After completing their test videos, the first 5 participants in the test write short answers describing the biggest positives and negatives of their experience, as well as suggestions for improvement.

2. After that, every additional tester who completes a test video will vote on the answers that have already been submitted.

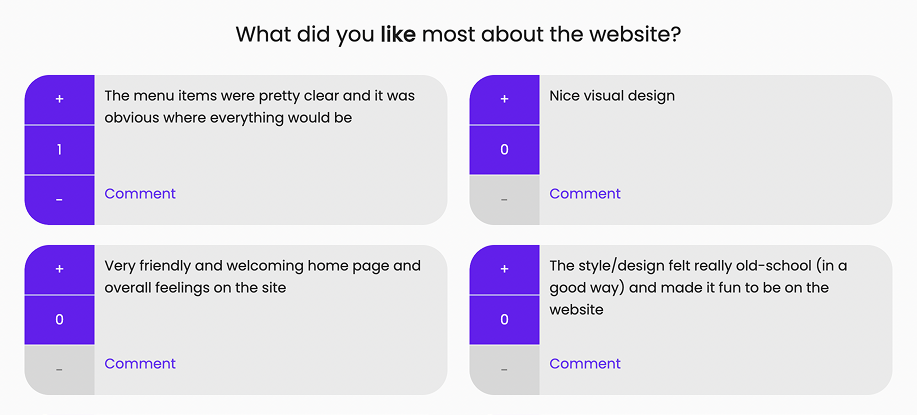

For each answer category (e.g., the “positives”), voters have 3 votes to cast among all the submitted responses. They can distribute these votes however they want. For example, if there are 3 separate answers they agree with, they might give 1 vote to each; if there is 1 answer they feel very strongly about, they might put all 3 votes on that one item.
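To make the voting rules concrete, here is a minimal Python sketch of a ballot check for one category. It is an illustration only, not Trymata’s implementation: the function name `validate_ballot`, the use of answer IDs, and the choice to let a voter leave votes unspent are all assumptions, since the description above only says each voter has 3 votes to distribute.

```python
VOTES_PER_CATEGORY = 3  # each voter gets 3 votes per answer category


def validate_ballot(ballot: dict[str, int], answer_ids: set[str]) -> None:
    """Check one voter's ballot for one category (hypothetical helper).

    `ballot` maps answer IDs to the number of votes placed on them.
    Raises ValueError if the ballot breaks the rules.
    """
    unknown = set(ballot) - answer_ids
    if unknown:
        raise ValueError(f"votes cast on unknown answers: {sorted(unknown)}")
    if any(not isinstance(n, int) or n < 0 for n in ballot.values()):
        raise ValueError("vote counts must be non-negative integers")
    # Assumption: a voter may spend fewer than 3 votes, but never more.
    if sum(ballot.values()) > VOTES_PER_CATEGORY:
        raise ValueError(f"at most {VOTES_PER_CATEGORY} votes per category")


answers = {"a1", "a2", "a3", "a4"}
validate_ballot({"a1": 1, "a2": 1, "a3": 1}, answers)  # spread across 3 answers
validate_ballot({"a4": 3}, answers)                     # all 3 on one answer
```

Both example ballots pass; a ballot with 4 votes, a negative count, or a vote on an unknown answer raises `ValueError`.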

Optionally, voters may also leave their own comments and thoughts on any of the submitted answers, whether or not they voted for them.

At the end of this process, you have an aggregated list of vote-ranked feedback items and commentary, compiled and sorted by the users themselves and ready for the researcher’s review.
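The aggregation step can be sketched in a few lines of Python. Assuming each ballot is a mapping from a (hypothetical) answer ID to the votes one tester placed on it, the totals are summed across voters and sorted from most to fewest votes. The alphabetical tie-break is our own choice to keep the output deterministic; how the product orders ties is not stated.

```python
from collections import Counter


def rank_answers(answer_ids, ballots):
    """Sum all ballots for one category and return (answer_id, total) pairs, most votes first."""
    # Start every answer at 0 so answers nobody voted for still appear in the list.
    totals = Counter({answer_id: 0 for answer_id in answer_ids})
    for ballot in ballots:
        totals.update(ballot)  # Counter.update adds counts instead of replacing them
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))


ballots = [{"a1": 3}, {"a1": 1, "a2": 2}]
print(rank_answers(["a1", "a2", "a3"], ballots))
# [('a1', 4), ('a2', 2), ('a3', 0)]
```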

Understanding your UX Crowd results

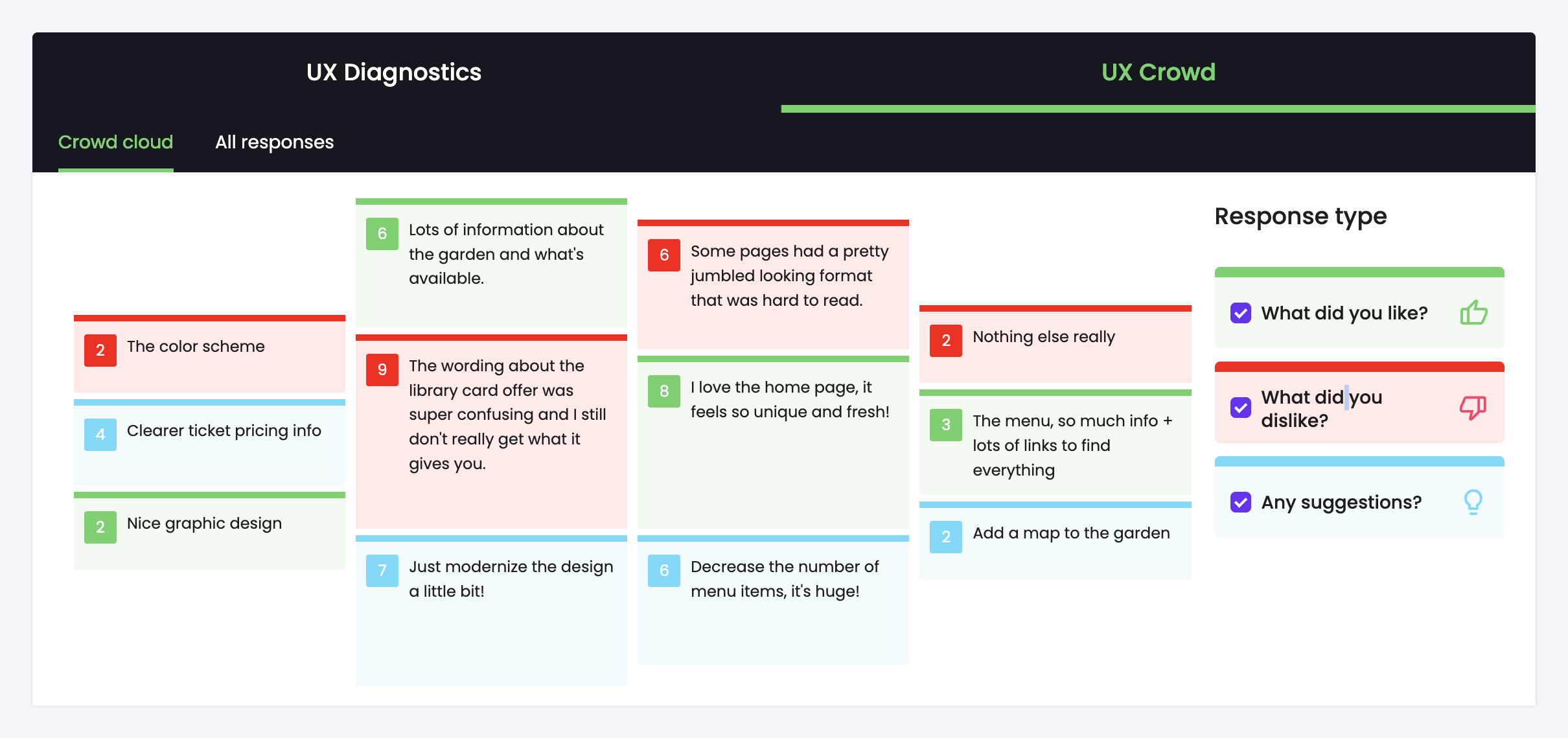

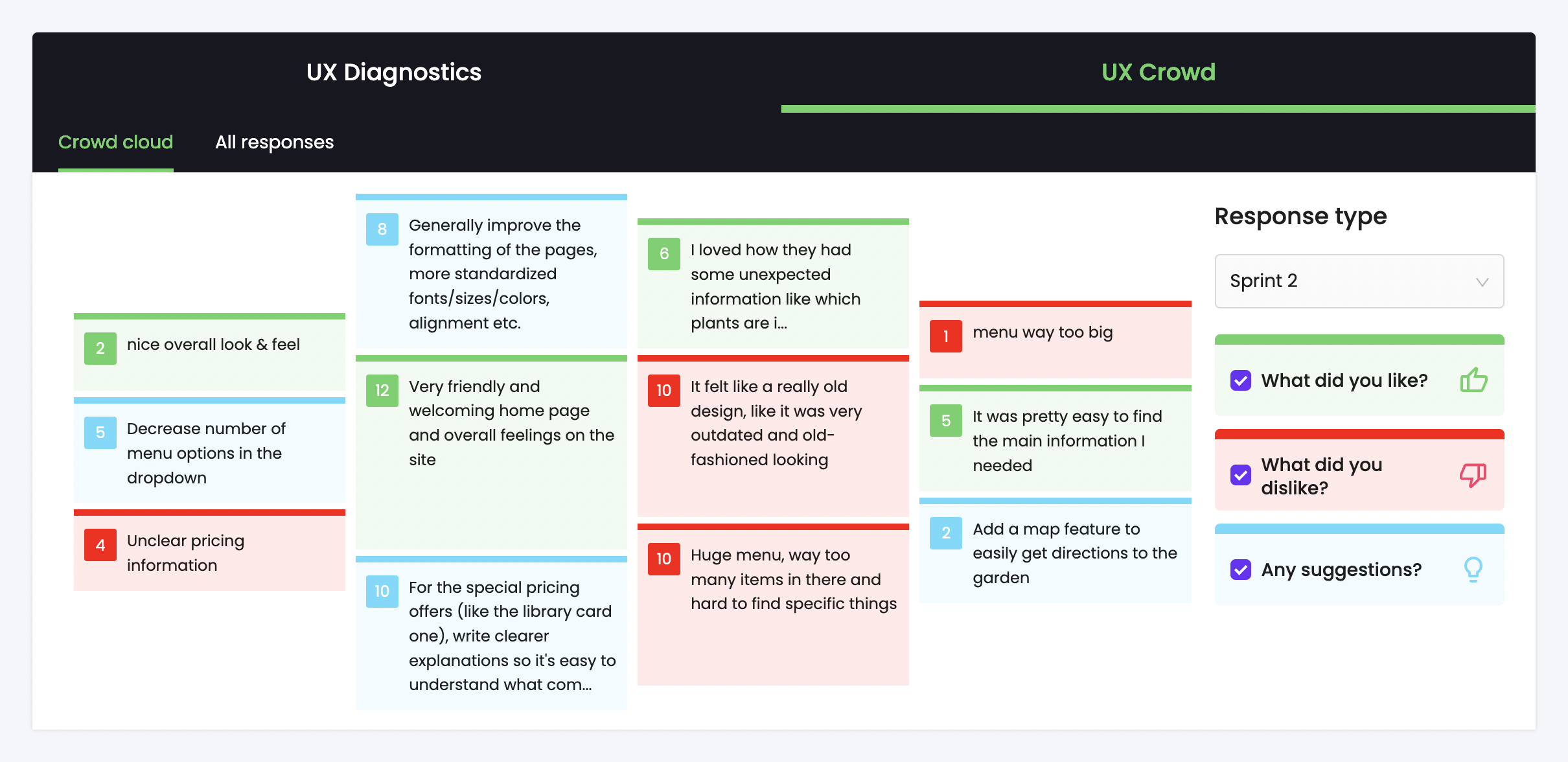

Once all your test results have come in, your UX Crowd section will show you all the top answers, ranked by vote totals and sortable by category.

By looking at our cloud view or list view, you can instantly find out the most significant positive and negative aspects of the user experience as judged by your test participants in aggregate.

The “Crowd cloud” vs “All responses”

These two views both show the same data, but in different styles.

In the cloud view, top-voted answers are displayed in color-coded boxes, sized to represent the number of votes received. The bigger the box, the more votes the answer got, so you can see at a glance what matters most to your participants.

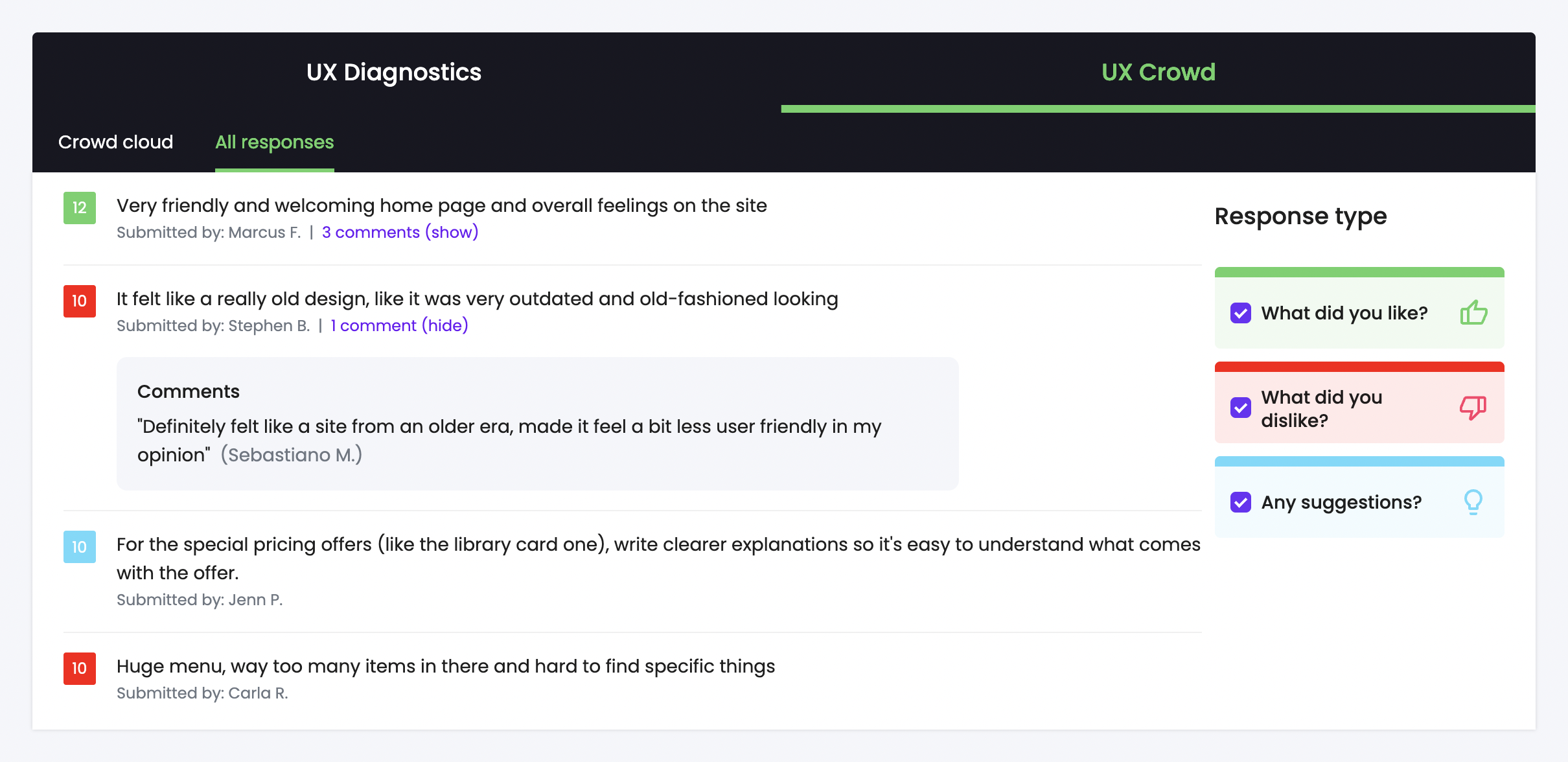
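One simple way to size boxes by vote count is to scale each box linearly between a minimum and a maximum size, relative to the top-voted answer. The sketch below is purely hypothetical: the pixel bounds, the function name, and the linear scaling are assumptions for illustration, not how Trymata actually renders the cloud.

```python
def box_sizes(vote_totals, min_px=60, max_px=240):
    """Map each answer's vote total to a box edge length in pixels (hypothetical sizing rule)."""
    if not vote_totals:
        return {}
    top = max(vote_totals.values())
    if top == 0:
        # No votes anywhere: draw every box at the minimum size.
        return {answer: float(min_px) for answer in vote_totals}
    # Linear scale: 0 votes -> min_px, the top-voted answer -> max_px.
    return {
        answer: min_px + (max_px - min_px) * votes / top
        for answer, votes in vote_totals.items()
    }


print(box_sizes({"a1": 4, "a2": 2, "a3": 0}))
# {'a1': 240.0, 'a2': 150.0, 'a3': 60.0}
```

A linear rule keeps the visual comparison honest (twice the votes, roughly twice the edge length above the minimum); an area-based rule would be an equally reasonable alternative.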

In the list view, all answers are displayed in order, with the highest-voted items at the top. This is the detailed option, where you can see everything that’s been collected, including comments.

What can you learn from the UX Crowd?

When your user test results come in, you’ll have a lot of video data to watch. Before you dive in, checking your UX Crowd responses will give you a quick pulse on what you’ll find.

The top-voted answers from each category will tell you:

- What key aspects of the experience users liked and disliked

- Which users have strong feelings about key items
    - You can see who submitted each answer, plus anyone who left a comment expressing their own opinion on it

- How much agreement or urgency there is around the top items
    - For example, a high concentration of votes on one answer calls for faster action than an even spread of votes where no single item stands out

- Which bits of feedback are less important or lack agreement
    - Items with very few votes can be noted and deprioritized, making it easier to filter out noisy data points

- What ideas users had for improving the experience
    - User suggestions are not a blueprint for your product’s roadmap, but they are often worth considering and can help inspire new ideas and angles
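If you want to put a number on “concentrated” versus “evenly spread” votes, one option is the top answer’s share of all votes in a category, alongside a list of low-vote items. Both the metric and the cutoff of 2 votes below are hypothetical choices for illustration; the UX Crowd results show vote totals, and any thresholds like these are up to the researcher.

```python
def top_share(vote_totals):
    """Fraction of all votes in a category that went to the single top answer."""
    total = sum(vote_totals.values())
    return max(vote_totals.values()) / total if total else 0.0


def low_vote_items(vote_totals, cutoff=2):
    """Answers with fewer than `cutoff` votes: candidates to note and deprioritize."""
    return sorted(answer for answer, votes in vote_totals.items() if votes < cutoff)


concentrated = {"a1": 12, "a2": 2, "a3": 1}
spread = {"a1": 5, "a2": 5, "a3": 5}
print(top_share(concentrated), top_share(spread))  # 0.8 vs. about 0.33
print(low_vote_items(concentrated))                # ['a3']
```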

Questions about the UX Crowd

Still wondering exactly how the UX Crowd feature works? Here are more detailed answers to some common questions.

Why do I need to order at least 10 testers to get the UX Crowd?

In a test with UX Crowd included, the first 5 participants to finish write the answers, and then the remainder vote. Without a minimum number of people to cast votes, there won’t be sufficient data to meaningfully rank which issues were more or less important.

With a minimum sample size of 10, at least 5 people will cast votes. Even this will not always produce a clear separation between items, but it serves as a practical minimum threshold.
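The arithmetic behind the minimum is straightforward. Because the first 5 finishers write answers, a test with N testers has N - 5 voters, each casting 3 votes per category. The small helper below (a hypothetical name, for illustration) shows that 10 testers yield 15 votes per category and 20 testers yield 45; more votes generally give the ranking more room to separate items.

```python
WRITERS = 5             # the first 5 finishers write answers
VOTES_PER_CATEGORY = 3  # each later tester gets 3 votes per category


def votes_per_category(testers):
    """Total votes cast in each category for a test with `testers` participants."""
    voters = max(testers - WRITERS, 0)
    return voters * VOTES_PER_CATEGORY


for n in (10, 15, 20):
    print(n, "testers ->", votes_per_category(n), "votes per category")
# 10 testers -> 15 votes per category
# 15 testers -> 30 votes per category
# 20 testers -> 45 votes per category
```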

Why do 5 people submit written answers?

Research has shown that the first 5 participants in a typical user test will uncover about 80% of the major issues.

We applied this principle when creating the UX Crowd feature: if 5 users each provide 2 answers in each of the 3 question categories (likes, dislikes, and suggestions), this should in theory surface the large majority of important issues and topics. While the list of answers won’t be exhaustive, anything that all 5 users left out is very unlikely to be a critical or high-priority issue.
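The “5 users” rule of thumb is usually explained with a simple problem-discovery model popularized by Jakob Nielsen and Tom Landauer: if each user independently has probability p of running into a given problem, n users are expected to find a share `1 - (1 - p) ** n` of the problems. The sketch below applies that formula; p = 0.31 is the average figure commonly cited for it, but real values vary a lot between products, so treat it as an assumption. It also counts the answer pool described above: 5 writers × 2 answers × 3 categories = 30 answers.

```python
WRITERS = 5
ANSWERS_PER_CATEGORY = 2
CATEGORIES = ("likes", "dislikes", "suggestions")


def share_found(n_users, p=0.31):
    """Expected share of problems found by n users, if each finds a given problem with probability p."""
    return 1 - (1 - p) ** n_users


print(WRITERS * ANSWERS_PER_CATEGORY * len(CATEGORIES))  # 30
for n in (1, 3, 5):
    print(n, round(share_found(n), 2))
# 1 0.31
# 3 0.67
# 5 0.84
```

Under this model, five users find roughly 84% of problems, in the same range as the figure quoted above, and each additional user adds less than the one before.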

How do you prevent bias when testers vote?

Several safeguards built into this feature help keep the voting as unbiased as possible:

- Most importantly, vote totals are not shown to the voters themselves. For each tester casting votes, all answers start at 0. They cannot tell which answers have already amassed lots of votes, or which ones have been unpopular.

- They also cannot see the comments left by other testers. Each person is essentially casting their votes in a vacuum, with everything aggregated together for viewing only on the researcher’s side.

- The display order of the answers is also randomized for each voter. This way, primacy bias and other effects caused by the order in which users see the answers are balanced out.
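Here is a minimal sketch of how a per-voter view could apply the first and third safeguards: each voter gets their own shuffled copy of the answers, and every answer is shown with a count of 0 regardless of how many votes it already has. Seeding the shuffle with a voter ID (so a voter sees a stable order if they reload the page) is our own assumption, as are the function and field names.

```python
import random


def voter_view(answers, voter_id):
    """Build what one voter sees: a private shuffled order, every count starting at 0."""
    rng = random.Random(voter_id)  # per-voter seed: stable for this voter, usually different across voters
    order = list(answers)
    rng.shuffle(order)
    # Real vote totals and other testers' comments are deliberately left out of the view.
    return [{"answer": text, "your_votes": 0} for text in order]


answers = ["Fast checkout", "Confusing menu labels", "Add a search bar"]
print(voter_view(answers, "tester-6"))
```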

How does the UX Crowd feature work with sprints?

The UX Crowd survey essentially “resets” each time a new test sprint is ordered. That doesn’t mean the old answers and vote counts are gone; they still appear when you view the prior sprint’s data.

However, for the testers participating in the new sprint, everything will start over with a clean slate. The first 5 testers of the new batch will submit brand new responses, and these will be the ones that then get voted on by the next testers.

This way your UX Crowd insights are not limited by what happened in prior test sprints, but can continually provide new information.
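The sprint behavior can be modeled with a small amount of state per sprint. In this sketch (class and method names are hypothetical), ordering a sprint creates a fresh record, the first 5 testers to finish within that sprint write answers and the rest vote, and earlier sprints are left untouched so their results remain viewable.

```python
from dataclasses import dataclass, field

WRITERS_PER_SPRINT = 5


@dataclass
class Sprint:
    name: str
    answers: list[str] = field(default_factory=list)  # written answers for this sprint only
    completed: int = 0                                 # testers who have finished so far

    def next_role(self) -> str:
        """Role of the next tester to finish: the first 5 write answers, everyone after votes."""
        self.completed += 1
        return "write" if self.completed <= WRITERS_PER_SPRINT else "vote"


@dataclass
class Study:
    sprints: list[Sprint] = field(default_factory=list)

    def order_sprint(self, name: str) -> Sprint:
        """Start a new sprint with a clean slate; earlier sprints are kept for viewing."""
        sprint = Sprint(name)
        self.sprints.append(sprint)
        return sprint


study = Study()
first = study.order_sprint("Sprint 1")
print([first.next_role() for _ in range(7)])  # 5 x 'write', then 2 x 'vote'
first.answers.append("Checkout felt fast")

second = study.order_sprint("Sprint 2")
print(second.next_role(), second.answers)  # write []
print(len(study.sprints), first.answers)   # 2 ['Checkout felt fast']
```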

Get the UX Crowd for your user tests

Want to collect crowdsourced, vote-ranked UX insights for your own website or app? The UX Crowd feature is available on our Team and Enterprise Plans for any test with 10 or more participants.

Whether you’re testing with large or small sample sizes, Trymata has many tools for doing efficient, effective analysis of your user test results – including our psychometric surveys, UX Diagnostics suite, and more.

Sign up for a free trial to start running your own research, or talk to one of our team members today!