Can experiences be quantified?

Much of the data that UX researchers and designers engage with is qualitative: focused on stories, descriptions, emotions, and reactions. All of that is valuable for understanding how people interact with designs, but quantitative data helps us do more with that knowledge.

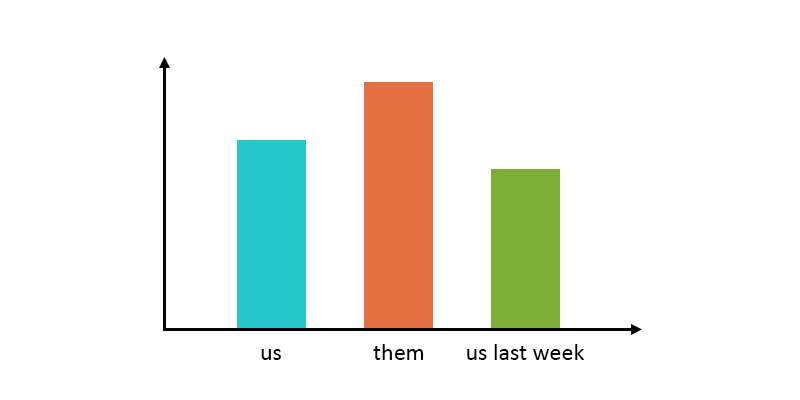

For one thing, measuring the usability of your designs lets you benchmark against past performance. Each time you iterate on your designs, you can use user testing metrics to compare usability scores and see whether the new designs are better or worse, and by how much. You can also run comparative usability studies to see how your performance ranks against competitors in your industry.

A number of psychometric surveys have been developed to quantify the user experience and put a number on the usability of websites and apps. Two popular ones, which will be the topic of this post, are the System Usability Scale (SUS) and the Post-Study System Usability Questionnaire (PSSUQ).


SUS: The System Usability Scale

The System Usability Scale, or SUS, is a usability quantification survey created in 1986 by John Brooke at Digital Equipment Corporation (DEC, now part of Hewlett-Packard).

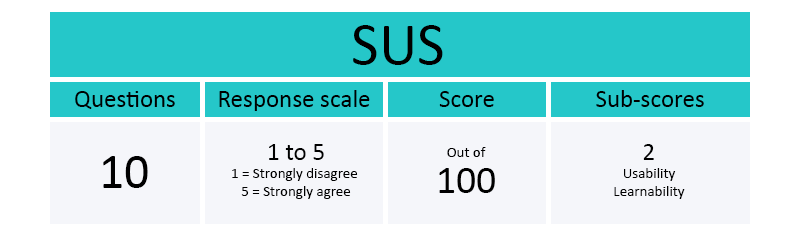

The survey: SUS can be used to quantify the usability of websites, apps, or any software or hardware that users interact with, wireframes and prototypes included. The survey, which Brooke described as a “quick and dirty” measurement tool, is just 10 questions long.

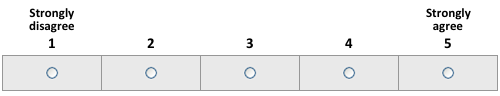

The “questions” are really statements about the website (or app, or other interactive system): for example, “I found the system unnecessarily complex.” Since SUS can be used to measure a wide variety of systems, the statements are broad and general. Testers rate how strongly they agree or disagree with each one using a 5-point Likert scale (as shown above).

The results: The end result is a score from 0 to 100 that reflects the overall usability of the tested website or app, based on the respondent’s experience. In addition, 2 sub-scores, derived from subsets of the 10 questions, reflect usability and learnability. (Update: this 2-factor breakdown has since been found not to be valid.)
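Turning the 10 answers into that 0 to 100 score follows the widely used procedure from Brooke’s original description: positively worded statements (the odd-numbered ones) and negatively worded statements (the even-numbered ones) are rescaled so that every item contributes 0 to 4 points, and the total is multiplied by 2.5. Below is a minimal Python sketch of that arithmetic, assuming each response is coded 1 (strongly disagree) to 5 (strongly agree) and supplied in the same order as the SUS list in the reference section at the end of this post. The function name `sus_score` is hypothetical, and the sketch leaves out the sub-scores.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Return the 0-100 SUS score for a single respondent.

    `responses` holds the 10 answers in questionnaire order, each coded
    1 (strongly disagree) to 5 (strongly agree). This coding is an assumption
    of this sketch; adjust it if your tool records answers differently.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for position, r in enumerate(responses, start=1):
        if position % 2 == 1:
            # Odd-numbered statements are positively worded: agreement is good.
            total += r - 1
        else:
            # Even-numbered statements are negatively worded: flip the scale.
            total += 5 - r

    # Every item now contributes 0-4, so the total is 0-40; scale it to 0-100.
    return total * 2.5


# Neutral answers everywhere land exactly in the middle of the range.
print(sus_score([3] * 10))  # 50.0
```

Because half the statements are phrased negatively, simply adding up the raw answers would cancel out; the flip on even-numbered items is what makes “strongly disagree with a negative statement” count as a good outcome.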

The SUS survey model is built into the Trymata usability testing platform and is available at all plan levels. It can be added to any test with a single click – no setup required.


PSSUQ: The Post-Study System Usability Questionnaire

The PSSUQ is another usability quantification survey, very similar to SUS, developed at the IBM Design Center in 1992.

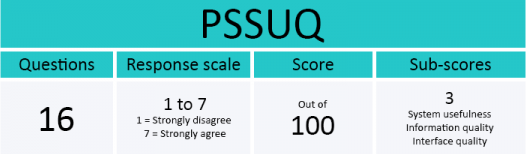

The survey: Like SUS, the survey is a series of statements describing the system, which users agree or disagree with using a Likert scale. PSSUQ was designed specifically for scenario-based usability studies, so some questions are more targeted, like “I was able to complete the tasks and scenarios quickly using this system.”

PSSUQ is significantly longer than SUS, with 16 questions in total. The answering scale is also more complex, ranging from 1 to 7 instead of 1 to 5. This allows testers to give more nuanced responses to each question.

The results: The end outcome is a score from 0 to 100, reflecting the overall usability of the website or app based on the respondent’s experience. PSSUQ also has 3 sub-scores, derived from subsets of the 16 questions, which reflect system usefulness, information quality, and interface quality.
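In the published scoring for this 16-item version of the questionnaire, each score is simply the average of the relevant items: the overall score uses items 1 to 16, system usefulness uses items 1 to 6, information quality uses items 7 to 12, and interface quality uses items 13 to 15 (item numbers follow the order of the PSSUQ list in the reference section below). Responses are conventionally coded 1 (strongly agree) to 7 (strongly disagree), so lower averages mean better usability, and unanswered or N/A items are left out of the average. The Python sketch below implements that averaging. The conversion to a 0 to 100 scale in `to_0_100` is a hypothetical linear rescaling added for illustration; it is not necessarily how Trymata or any other tool produces its 0 to 100 figure, and both function names are made up for this example.

```python
from typing import Dict, Optional, Sequence

# 1-based, inclusive item ranges for the 16-item PSSUQ, in the order of
# the reference list.
SUBSCALES = {
    "overall": (1, 16),
    "system_usefulness": (1, 6),
    "information_quality": (7, 12),
    "interface_quality": (13, 15),
}


def pssuq_scores(responses: Sequence[Optional[int]]) -> Dict[str, float]:
    """Average one respondent's answers into the overall score and sub-scores.

    `responses` holds 16 answers coded 1 (strongly agree) to 7 (strongly
    disagree); None marks an item left blank or answered N/A.
    Lower averages indicate better perceived usability.
    """
    if len(responses) != 16:
        raise ValueError("expected exactly 16 responses")
    for r in responses:
        if r is not None and not 1 <= r <= 7:
            raise ValueError("each response must be from 1 to 7, or None")

    scores = {}
    for name, (first, last) in SUBSCALES.items():
        # Skip unanswered items instead of treating them as zero.
        answered = [r for r in responses[first - 1:last] if r is not None]
        if not answered:
            raise ValueError(f"no answered items for {name}")
        scores[name] = sum(answered) / len(answered)
    return scores


def to_0_100(average: float) -> float:
    """Hypothetical rescaling: an average of 1 (best) -> 100, 7 (worst) -> 0."""
    return (7 - average) / 6 * 100


result = pssuq_scores([1] * 6 + [3] * 6 + [5] * 3 + [None])
print(result["system_usefulness"], result["interface_quality"])  # 1.0 5.0
```

Keeping the raw 1 to 7 averages alongside any rescaled number makes it easier to compare your results with published PSSUQ benchmarks, which are usually reported on the original scale.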

The PSSUQ is available at our higher plan levels (Enterprise and up) on the Trymata usability testing platform. It can also be used on our 2-week free trial (along with 5 user testing results and all other Enterprise features).

SUS vs PSSUQ: Which one should I use?

Both SUS and PSSUQ are popular and respected psychometric questionnaires for measuring the user experience. Which one should you use for your usability study?

Here are 3 things to consider:

- Look at the list of questions for each survey. Is one more applicable or better suited to your study? If so, go with that one.

- Are any of the sub-scores for either survey especially interesting or relevant for your study? If you want to know about learnability, for example, choose SUS (bearing in mind the update above about the validity of its sub-scores); or if you want to know about information quality, choose PSSUQ.

- Think about tester fatigue. PSSUQ is a longer and more complex survey, and therefore puts greater cognitive strain on testers. If your test already has lots of tasks or is highly complex or difficult, such as a thorough ecommerce UX study, it will probably be better to use SUS (or pare down tasks or questions elsewhere).

Beyond these factors, which one you choose is essentially a matter of personal preference and what user testing strategies or methodologies you have in place. Just make sure to stick with the same survey model for all your user testing studies, so you can benchmark performance over time and against competitors.
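The benchmarking advice boils down to a simple rule: only compare averages that come from the same survey model. The Python sketch below encodes that rule, assuming you already have one overall score per respondent for each round of testing. The `Study` class, the `compare` function, and the example scores are all hypothetical and exist only to illustrate the idea.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Study:
    """One round of testing: the survey model used and each respondent's score."""
    name: str
    survey: str              # e.g. "SUS" or "PSSUQ"
    scores: Sequence[float]  # one overall score per respondent

    def mean_score(self) -> float:
        if not self.scores:
            raise ValueError(f"{self.name} has no scores")
        return sum(self.scores) / len(self.scores)


def compare(baseline: Study, current: Study) -> float:
    """Return the current study's mean score minus the baseline's mean score.

    Refuses to compare studies that used different survey models, because
    their scores are not on a common scale.
    """
    if baseline.survey != current.survey:
        raise ValueError(
            f"cannot benchmark {current.survey} results against {baseline.survey}"
        )
    return current.mean_score() - baseline.mean_score()


# Hypothetical SUS results from two design iterations.
v1 = Study("Checkout v1", "SUS", [60.0, 70.0, 65.0])
v2 = Study("Checkout v2", "SUS", [75.0, 70.0, 80.0])
print(compare(v1, v2))  # 10.0
```

How to read the difference depends on the survey: a positive change is an improvement for SUS, where higher is better, but not for raw PSSUQ averages, where lower is better. With only a handful of testers per round, a difference of a few points may also be noise rather than a real change.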

Reference: SUS and PSSUQ question lists

Below are the full sets of questions for each of the 2 surveys.

SUS:

- I think that I would like to use this system frequently.

- I found the system unnecessarily complex.

- I thought the system was easy to use.

- I think that I would need the support of a technical person to be able to use this system.

- I found the various functions in this system were well integrated.

- I thought there was too much inconsistency in this system.

- I would imagine that most people would learn to use this system very quickly.

- I found the system very cumbersome to use.

- I felt very confident using the system.

- I needed to learn a lot of things before I could get going with this system.

PSSUQ:

- Overall, I am satisfied with how easy it is to use this system.

- It was simple to use this system.

- I was able to complete the tasks and scenarios quickly using this system.

- I felt comfortable using this system.

- It was easy to learn to use this system.

- I believe I could become productive quickly using this system.

- The system gave error messages that clearly told me how to fix problems.

- Whenever I made a mistake using the system, I could recover easily and quickly.

- The information (such as online help, on-screen messages, and other documentation) provided with this system was clear.

- It was easy to find the information I needed.

- The information was effective in helping me complete the tasks and scenarios.

- The organization of information on the system screens was clear.

- The interface of this system was pleasant.

- I liked using the interface of this system.

- This system has all the functions and capabilities I expect it to have.

- Overall, I am satisfied with this system.